Python: Analyzing Student Data Lab (69)

Lab: Analyzing Student Data

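We are now ready to put neural networks into practice. We will analyze the following student admissions data from the University of California, Los Angeles (UCLA).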

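In this notebook, you will carry out several steps of training a neural network, namely:

- One-hot encoding the data

- Scaling the data

- Writing the backpropagation step

Predicting student admissions with neural networks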

In this notebook, we predict graduate admissions at UCLA based on three pieces of data:

- GRE Scores (Test)

- GPA Scores (Grades)

- Class rank (1-4)

Dataset source: http://www.ats.ucla.edu/

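Loading the data

To load the data and format it nicely, we will use two very useful packages, Pandas and NumPy. See each package's official documentation for details.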

# Importing pandas and numpy
import pandas as pd
import numpy as np

# Reading the csv file into a pandas DataFrame
data = pd.read_csv('student_data.csv')

# Printing out the first 10 rows of our data
data[:10]

| | admit | gre | gpa | rank |
|---|---|---|---|---|
| 0 | 0 | 380 | 3.61 | 3 |
| 1 | 1 | 660 | 3.67 | 3 |
| 2 | 1 | 800 | 4.00 | 1 |
| 3 | 1 | 640 | 3.19 | 4 |
| 4 | 0 | 520 | 2.93 | 4 |
| 5 | 1 | 760 | 3.00 | 2 |
| 6 | 1 | 560 | 2.98 | 1 |
| 7 | 0 | 400 | 3.08 | 2 |
| 8 | 1 | 540 | 3.39 | 3 |
| 9 | 0 | 700 | 3.92 | 2 |
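Before going further, it can help to confirm that the columns were parsed with the expected types and to see how balanced the two classes are. The sketch below is only illustrative: it rebuilds the ten rows shown above from an inline string as a stand-in for `student_data.csv`, and the names `sample_csv` and `sample` are hypothetical.

```python
import io
import pandas as pd

# Stand-in for student_data.csv: the ten rows displayed above.
# Assumption: the real file has the same four columns.
sample_csv = """admit,gre,gpa,rank
0,380,3.61,3
1,660,3.67,3
1,800,4.00,1
1,640,3.19,4
0,520,2.93,4
1,760,3.00,2
1,560,2.98,1
0,400,3.08,2
1,540,3.39,3
0,700,3.92,2
"""
sample = pd.read_csv(io.StringIO(sample_csv))

# Column types: gpa should be float, the other columns integer.
print(sample.dtypes)

# Missing values would need handling before training.
print(sample.isna().sum())

# Share of admitted (1) vs. rejected (0) applicants in the sample.
print(sample["admit"].value_counts(normalize=True))
```

On the full dataset the same three checks can be run directly on `data`.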

绘制数据

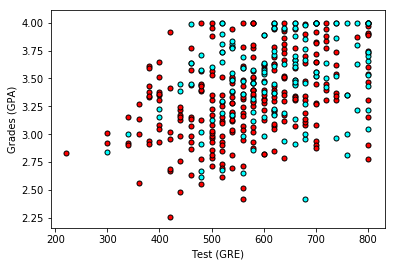

首先让我们对数据进行绘图,看看它是什么样的。为了绘制二维图,让我们先忽略评级 (rank)。

# Importing matplotlib
import matplotlib.pyplot as plt
%matplotlib inline

# Function to help us plot
def plot_points(data):
    X = np.array(data[["gre","gpa"]])
    #print(X)
    y = np.array(data["admit"])
    #print(y)
    admitted = X[np.argwhere(y==1)]
    rejected = X[np.argwhere(y==0)]
    plt.scatter([s[0][0] for s in rejected], [s[0][1] for s in rejected], s = 25, color = 'red', edgecolor = 'k')
    plt.scatter([s[0][0] for s in admitted], [s[0][1] for s in admitted], s = 25, color = 'cyan', edgecolor = 'k')
    plt.xlabel('Test (GRE)')
    plt.ylabel('Grades (GPA)')

# Plotting the points
plot_points(data)
plt.show()

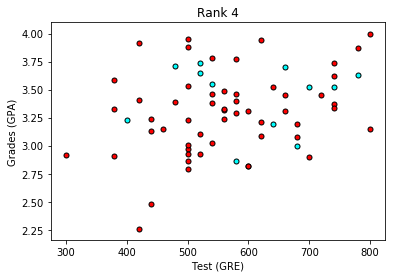

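Roughly speaking, students with high grades and high test scores were admitted and those with low scores were not, but the data is not as cleanly separated as we would like. Perhaps taking the rank into account will help? Next we will draw 4 plots, one for each rank.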

# Separating the ranks

data_rank1 = data[data["rank"]==1]

data_rank2 = data[data["rank"]==2]

data_rank3 = data[data["rank"]==3]

data_rank4 = data[data["rank"]==4]

print(data_rank1.head(5))

print(data_rank2.head(5))

# Plotting the graphs

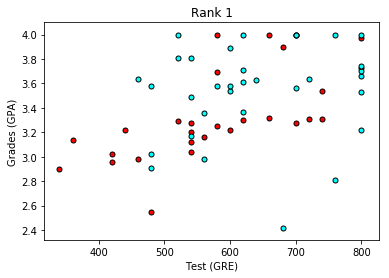

plot_points(data_rank1)

plt.title("Rank 1")

plt.show()

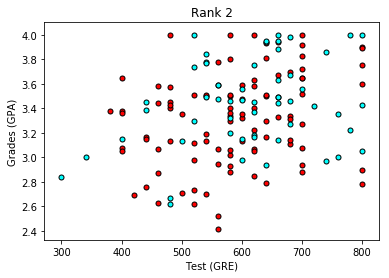

plot_points(data_rank2)

plt.title("Rank 2")

plt.show()

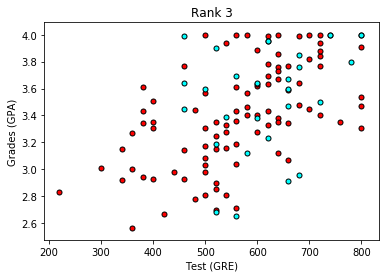

plot_points(data_rank3)

plt.title("Rank 3")

plt.show()

plot_points(data_rank4)

plt.title("Rank 4")

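plt.show()

Output of the two print calls above (first five rows of rank 1, then of rank 2):

| | admit | gre | gpa | rank |
|---|---|---|---|---|
| 2 | 1 | 800 | 4.00 | 1 |
| 6 | 1 | 560 | 2.98 | 1 |
| 11 | 0 | 440 | 3.22 | 1 |
| 12 | 1 | 760 | 4.00 | 1 |
| 14 | 1 | 700 | 4.00 | 1 |

| | admit | gre | gpa | rank |
|---|---|---|---|---|
| 5 | 1 | 760 | 3.00 | 2 |
| 7 | 0 | 400 | 3.08 | 2 |
| 9 | 0 | 700 | 3.92 | 2 |
| 13 | 0 | 700 | 3.08 | 2 |
| 18 | 0 | 800 | 3.75 | 2 |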

This looks much better: it seems that the lower the rank number, the higher the acceptance rate. Let's use the rank as one of our inputs. To do that, we should one-hot encode it.
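The visual impression from the plots can also be checked numerically by computing the admission rate per rank. The following is a minimal sketch that uses only the ten rows printed at the start of this notebook, so the numbers it produces are not representative of the full dataset; the names `sample` and `admit_rate` are hypothetical.

```python
import pandas as pd

# The ten rows shown earlier in this notebook (not the full dataset).
sample = pd.DataFrame({
    "admit": [0, 1, 1, 1, 0, 1, 1, 0, 1, 0],
    "rank":  [3, 3, 1, 4, 4, 2, 1, 2, 3, 2],
})

# The mean of a 0/1 column is the fraction of admitted applicants,
# so grouping by rank gives one admission rate per rank.
admit_rate = sample.groupby("rank")["admit"].mean()
print(admit_rate)
```

Running the same `groupby` on the full `data` frame gives a more reliable picture than ten rows can.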

One-hot encoding the rank

We will use the get_dummies function in Pandas.

# TODO: Make dummy variables for rank
# Horizontal concatenation (rows aligned by index): https://blog.csdn.net/mr_hhh/article/details/79488445
# dtype=int keeps the dummy columns as 0/1 (newer pandas versions default to booleans)
one_hot_data = pd.concat([data, pd.get_dummies(data['rank'], prefix='rank', dtype=int)], axis=1)
print(one_hot_data.head(5))

# TODO: Drop the previous rank column
one_hot_data = one_hot_data.drop('rank', axis=1)

# Print the first 10 rows of our data
one_hot_data[:10]

Output of print(one_hot_data.head(5)), before the rank column is dropped:

| | admit | gre | gpa | rank | rank_1 | rank_2 | rank_3 | rank_4 |
|---|---|---|---|---|---|---|---|---|
| 0 | 0 | 380 | 3.61 | 3 | 0 | 0 | 1 | 0 |
| 1 | 1 | 660 | 3.67 | 3 | 0 | 0 | 1 | 0 |
| 2 | 1 | 800 | 4.00 | 1 | 1 | 0 | 0 | 0 |
| 3 | 1 | 640 | 3.19 | 4 | 0 | 0 | 0 | 1 |
| 4 | 0 | 520 | 2.93 | 4 | 0 | 0 | 0 | 1 |

First 10 rows after dropping the rank column:

| | admit | gre | gpa | rank_1 | rank_2 | rank_3 | rank_4 |
|---|---|---|---|---|---|---|---|
| 0 | 0 | 380 | 3.61 | 0 | 0 | 1 | 0 |
| 1 | 1 | 660 | 3.67 | 0 | 0 | 1 | 0 |
| 2 | 1 | 800 | 4.00 | 1 | 0 | 0 | 0 |
| 3 | 1 | 640 | 3.19 | 0 | 0 | 0 | 1 |
| 4 | 0 | 520 | 2.93 | 0 | 0 | 0 | 1 |
| 5 | 1 | 760 | 3.00 | 0 | 1 | 0 | 0 |
| 6 | 1 | 560 | 2.98 | 1 | 0 | 0 | 0 |
| 7 | 0 | 400 | 3.08 | 0 | 1 | 0 | 0 |
| 8 | 1 | 540 | 3.39 | 0 | 0 | 1 | 0 |
| 9 | 0 | 700 | 3.92 | 0 | 1 | 0 | 0 |
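One detail of get_dummies worth knowing is that it only creates columns for values that actually occur in the data. The sketch below, on a hypothetical toy column in which rank 2 never appears, shows that behaviour and one possible way to keep a column for every rank by declaring the categories up front. The names `ranks`, `partial`, `ranks_cat` and `full` are illustrative and not part of the notebook.

```python
import pandas as pd

# Hypothetical toy column; note that rank 2 never occurs.
ranks = pd.Series([3, 3, 1, 4], name="rank")

# Plain get_dummies only creates columns for values that are present.
# dtype=int requests 0/1 columns (recent pandas versions default to booleans).
partial = pd.get_dummies(ranks, prefix="rank", dtype=int)
print(list(partial.columns))

# Declaring the full set of categories first keeps a column for every rank,
# even for ranks that are missing from this particular sample.
ranks_cat = pd.Series(pd.Categorical(ranks, categories=[1, 2, 3, 4]), name="rank")
full = pd.get_dummies(ranks_cat, prefix="rank", dtype=int)
print(full)
```

This matters mostly when the training and test sets are encoded separately, since a missing rank in one of them would otherwise produce frames with different columns.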

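Scaling the data

The next step is to scale the data. Notice that the grades range from 1.0 to 4.0, while the test scores range roughly from 200 to 800, which is a much larger range. This means the features are on very different scales, which makes the data hard for a neural network to handle. Let's fit both features into the 0-1 range by dividing the grades by 4.0 and the test scores by 800.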

# Making a copy of our data (an explicit copy avoids modifying one_hot_data)
processed_data = one_hot_data.copy()

# TODO: Scale the columns
processed_data['gre'] = processed_data['gre'] / 800
processed_data['gpa'] = processed_data['gpa'] / 4.0

# Printing the first 10 rows of our processed data
processed_data[:10]

| | admit | gre | gpa | rank_1 | rank_2 | rank_3 | rank_4 |
|---|---|---|---|---|---|---|---|
| 0 | 0 | 0.475 | 0.9025 | 0 | 0 | 1 | 0 |
| 1 | 1 | 0.825 | 0.9175 | 0 | 0 | 1 | 0 |
| 2 | 1 | 1.000 | 1.0000 | 1 | 0 | 0 | 0 |
| 3 | 1 | 0.800 | 0.7975 | 0 | 0 | 0 | 1 |
| 4 | 0 | 0.650 | 0.7325 | 0 | 0 | 0 | 1 |
| 5 | 1 | 0.950 | 0.7500 | 0 | 1 | 0 | 0 |
| 6 | 1 | 0.700 | 0.7450 | 1 | 0 | 0 | 0 |
| 7 | 0 | 0.500 | 0.7700 | 0 | 1 | 0 | 0 |
| 8 | 1 | 0.675 | 0.8475 | 0 | 0 | 1 | 0 |
| 9 | 0 | 0.875 | 0.9800 | 0 | 1 | 0 | 0 |
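To make the arithmetic concrete, here is a small sketch that applies the same division to the first five GRE and GPA values shown earlier. It also includes min-max scaling as a common alternative; that alternative is not what this notebook uses, and the helper name `min_max` is hypothetical.

```python
import numpy as np

# Values taken from the first five rows of the original table.
gre = np.array([380, 660, 800, 640, 520], dtype=float)
gpa = np.array([3.61, 3.67, 4.00, 3.19, 2.93])

# Approach used in this notebook: divide by the known maximum of each scale.
gre_scaled = gre / 800
gpa_scaled = gpa / 4.0

# Alternative (not used here): min-max scaling computed from the data itself.
def min_max(values):
    return (values - values.min()) / (values.max() - values.min())

print(gre_scaled)    # compare with the gre column of the scaled table
print(gpa_scaled)    # compare with the gpa column of the scaled table
print(min_max(gre))  # smallest value maps to 0, largest to 1
```

Dividing by a fixed maximum has the advantage that the transformation does not depend on which rows happen to be in the sample.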

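Splitting the data into training and testing sets

To test our algorithm, we split the data into a training set and a testing set. The testing set will be 10% of the total data.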
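As a hedged illustration of a 90/10 split, independent of the notebook's data, the sketch below draws 90% of the index labels at random without replacement and uses the remaining rows for testing. The frame `toy`, the seed value and the variable names are assumptions made for the example.

```python
import numpy as np
import pandas as pd

# Hypothetical stand-in for the processed data: 20 rows with a 0/1 label.
toy = pd.DataFrame({"x": np.arange(20) / 20, "admit": np.arange(20) % 2})

# A seeded generator makes the split reproducible (the seed value is arbitrary).
rng = np.random.default_rng(0)
train_idx = rng.choice(toy.index, size=int(len(toy) * 0.9), replace=False)

# Select by label for training and drop the same labels for testing,
# so the two sets cannot overlap.
train = toy.loc[train_idx]
test = toy.drop(train_idx)

print(len(train), len(test))
```

Sampling without replacement guarantees that no row appears twice in the training set, and dropping the sampled labels guarantees that every remaining row ends up in the test set.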

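sample = np.random.choice(processed_data.index, size=int(len(processed_data)*0.9), replace=False)
# sample holds index labels, so select with .loc (label-based) to match drop()
train_data, test_data = processed_data.loc[sample], processed_data.drop(sample)

print("Number of training samples is", len(train_data))
print("Number of testing samples is", len(test_data))
print(train_data[:10])
print(test_data[:10])

Number of training samples is 360

Number of testing samples is 40

| | admit | gre | gpa | rank_1 | rank_2 | rank_3 | rank_4 |
|---|---|---|---|---|---|---|---|
| 353 | 0 | 0.875 | 0.8800 | 0 | 1 | 0 | 0 |
| 52 | 0 | 0.925 | 0.8425 | 0 | 0 | 0 | 1 |
| 369 | 0 | 1.000 | 0.9725 | 0 | 1 | 0 | 0 |
| 362 | 0 | 0.850 | 0.7850 | 0 | 1 | 0 | 0 |
| 46 | 1 | 0.725 | 0.8650 | 0 | 1 | 0 | 0 |
| 121 | 1 | 0.600 | 0.6675 | 0 | 1 | 0 | 0 |
| 101 | 0 | 0.725 | 0.8925 | 0 | 0 | 1 | 0 |
| 202 | 1 | 0.875 | 1.0000 | 1 | 0 | 0 | 0 |
| 151 | 0 | 0.500 | 0.8450 | 0 | 1 | 0 | 0 |
| 358 | 1 | 0.700 | 0.9225 | 0 | 0 | 1 | 0 |

| | admit | gre | gpa | rank_1 | rank_2 | rank_3 | rank_4 |
|---|---|---|---|---|---|---|---|
| 5 | 1 | 0.950 | 0.7500 | 0 | 1 | 0 | 0 |
| 10 | 0 | 1.000 | 1.0000 | 0 | 0 | 0 | 1 |
| 11 | 0 | 0.550 | 0.8050 | 1 | 0 | 0 | 0 |
| 17 | 0 | 0.450 | 0.6400 | 0 | 0 | 1 | 0 |
| 24 | 1 | 0.950 | 0.8375 | 0 | 1 | 0 | 0 |
| 33 | 1 | 1.000 | 1.0000 | 0 | 0 | 1 | 0 |
| 37 | 0 | 0.650 | 0.7250 | 0 | 0 | 1 | 0 |
| 57 | 0 | 0.475 | 0.7350 | 0 | 0 | 1 | 0 |
| 69 | 0 | 1.000 | 0.9325 | 1 | 0 | 0 | 0 |
| 76 | 0 | 0.700 | 0.8400 | 0 | 0 | 1 | 0 |

Splitting the data into features and targets (labels)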

Now, as the final step before training, we will split the data into features (X) and targets (y).

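features = train_data.drop('admit', axis=1)
targets = train_data['admit']
features_test = test_data.drop('admit', axis=1)
targets_test = test_data['admit']

print(features[:10])
print(targets[:10])

| | gre | gpa | rank_1 | rank_2 | rank_3 | rank_4 |
|---|---|---|---|---|---|---|
| 353 | 0.875 | 0.8800 | 0 | 1 | 0 | 0 |
| 52 | 0.925 | 0.8425 | 0 | 0 | 0 | 1 |
| 369 | 1.000 | 0.9725 | 0 | 1 | 0 | 0 |
| 362 | 0.850 | 0.7850 | 0 | 1 | 0 | 0 |
| 46 | 0.725 | 0.8650 | 0 | 1 | 0 | 0 |
| 121 | 0.600 | 0.6675 | 0 | 1 | 0 | 0 |
| 101 | 0.725 | 0.8925 | 0 | 0 | 1 | 0 |
| 202 | 0.875 | 1.0000 | 1 | 0 | 0 | 0 |
| 151 | 0.500 | 0.8450 | 0 | 1 | 0 | 0 |
| 358 | 0.700 | 0.9225 | 0 | 0 | 1 | 0 |

| | admit |
|---|---|
| 353 | 0 |
| 52 | 0 |
| 369 | 0 |
| 362 | 0 |
| 46 | 1 |
| 121 | 1 |
| 101 | 0 |
| 202 | 1 |
| 151 | 0 |
| 358 | 1 |

Name: admit, dtype: int64

Training the 2-layer neural network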

The following function trains the 2-layer neural network. First, we will write some helper functions.

# Activation (sigmoid) function
def sigmoid(x):
    return 1 / (1 + np.exp(-x))

# Derivative of the sigmoid function
def sigmoid_prime(x):
    return sigmoid(x) * (1-sigmoid(x))

# Cross-entropy error for a single record
def error_formula(y, output):
    return - y*np.log(output) - (1 - y) * np.log(1-output)

Backpropagating the error

Now it's your turn to practice by writing the error term. Remember that it is given by the equation $$ (y-\hat{y}) $$.
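The reason the error term takes this simple form is the chain rule. For the cross-entropy error $$ E = -y\ln\hat{y} - (1-y)\ln(1-\hat{y}) $$ with $$ \hat{y} = \sigma(w \cdot x) $$, the factor $$ \hat{y}(1-\hat{y}) $$ from the sigmoid derivative cancels the denominator of $$ \partial E/\partial\hat{y} $$, leaving $$ \partial E/\partial w_i = -(y-\hat{y})\,x_i $$. The sketch below checks this numerically with central finite differences. The input `x` is the first scaled row from the table earlier, while the weights `w`, the label `y` and the step size `eps` are arbitrary values chosen for illustration.

```python
import numpy as np

def sigmoid(x):
    return 1 / (1 + np.exp(-x))

def cross_entropy(w, x, y):
    # Same formula as error_formula, written as a function of the weights.
    output = sigmoid(np.dot(x, w))
    return -y * np.log(output) - (1 - y) * np.log(1 - output)

# x: first scaled row (gre, gpa, rank_1..rank_4); w and y are arbitrary.
x = np.array([0.475, 0.9025, 0.0, 0.0, 1.0, 0.0])
w = np.array([0.1, -0.2, 0.3, 0.05, -0.4, 0.2])
y = 1

# Analytic gradient: dE/dw = -(y - output) * x
output = sigmoid(np.dot(x, w))
analytic = -(y - output) * x

# Central finite differences, one weight at a time.
eps = 1e-6
numeric = np.zeros_like(w)
for i in range(len(w)):
    step = np.zeros_like(w)
    step[i] = eps
    numeric[i] = (cross_entropy(w + step, x, y) - cross_entropy(w - step, x, y)) / (2 * eps)

# The two gradients should agree up to numerical error.
print(np.allclose(analytic, numeric, atol=1e-6))
```

Gradient descent moves against this gradient, which is why the weight update adds the error term times the input rather than subtracting it.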

# TODO: Write the error term formula
def error_term_formula(y, output):
    return (y-output)

# Neural Network hyperparameters
epochs = 1000
learnrate = 0.5

# Training function
def train_nn(features, targets, epochs, learnrate):

    # Use the same seed to make debugging easier
    np.random.seed(42)

    n_records, n_features = features.shape
    last_loss = None

    # Initialize weights
    weights = np.random.normal(scale=1 / n_features**.5, size=n_features)

    for e in range(epochs):
        del_w = np.zeros(weights.shape)
        for x, y in zip(features.values, targets):
            # Loop through all records, x is the input, y is the target

            # Activation of the output unit
            # Notice we multiply the inputs and the weights here
            # rather than storing h as a separate variable
            output = sigmoid(np.dot(x, weights))

            # The cross-entropy error for this record
            # (computed for reference; it is not used in the update below)
            error = error_formula(y, output)

            # The error term. For the cross-entropy error with a sigmoid
            # output this is simply (y - output), so no separate f'(h)
            # factor is needed
            error_term = error_term_formula(y, output)

            # The gradient descent step, the error term times the inputs
            del_w += error_term * x

        # Update the weights here. The learning rate times the
        # change in weights, divided by the number of records to average
        weights += learnrate * del_w / n_records

        # Printing out the mean squared error on the training set
        if e % (epochs / 10) == 0:
            out = sigmoid(np.dot(features, weights))
            loss = np.mean((out - targets) ** 2)
            print("Epoch:", e)
            if last_loss and last_loss < loss:
                print("Train loss: ", loss, "  WARNING - Loss Increasing")
            else:
                print("Train loss: ", loss)
            last_loss = loss
            print("=========")
    print("Finished training!")
    return weights

weights = train_nn(features, targets, epochs, learnrate)

Epoch: 0

Train loss: 0.259480332669

=========

Epoch: 100

Train loss: 0.207651606773

=========

Epoch: 200

Train loss: 0.206908198898

=========

Epoch: 300

Train loss: 0.20638022849

=========

Epoch: 400

Train loss: 0.20590160288

=========

Epoch: 500

Train loss: 0.205462049248

=========

Epoch: 600

Train loss: 0.205056408521

=========

Epoch: 700

Train loss: 0.204680361936

=========

Epoch: 800

Train loss: 0.204330297852

=========

Epoch: 900

Train loss: 0.204003215114

=========

Finished training!

Calculating the accuracy on the test data

# Calculate accuracy on test data
test_out = sigmoid(np.dot(features_test, weights))
predictions = test_out > 0.5
accuracy = np.mean(predictions == targets_test)
print("Prediction accuracy: {:.3f}".format(accuracy))

Prediction accuracy: 0.700

Those who act often succeed; those who keep walking often arrive.

Free to repost, non-commercial, no derivatives, attribution required (Creative Commons 3.0 license)