AI For Trading: Project of NLP on Financial Statements (94)

Project 5: NLP on Financial Statements

Instructions

Each problem consists of a function to implement and instructions on how to implement the function. The parts of the function that need to be implemented are marked with a # TODO comment. After implementing the function, run the cell to test it against the unit tests we've provided. For each problem, we provide one or more unit tests from our project_tests package. These unit tests won't tell you if your answer is correct, but will warn you of any major errors. Your code will be checked for the correct solution when you submit it to Udacity.

Packages

When you implement the functions, you'll only need to use the packages you've used in the classroom, like Pandas and Numpy. These packages will be imported for you. We recommend you don't add any import statements, otherwise the grader might not be able to run your code.

The other packages that we're importing are project_helper and project_tests. These are custom packages built to help you solve the problems. The project_helper module contains utility functions and graph functions. The project_tests module contains the unit tests for all the problems.

Install Packages

import sys

!{sys.executable} -m pip install -r requirements.txt

Requirement already satisfied: alphalens==0.3.2 in /opt/conda/lib/python3.6/site-packages (from -r requirements.txt (line 1)) (0.3.2)

Requirement already satisfied: ipython-genutils in /opt/conda/lib/python3.6/site-packages (from traitlets>=4.2->IPython>=3.2.3->alphalens==0.3.2->-r requirements.txt (line 1)) (0.2.0)

Load Packages

import nltk

import numpy as np

import pandas as pd

import pickle

import pprint

import project_helper

import project_tests

from tqdm import tqdm

Download NLP Corpora

You'll need two corpora to run this project: the stopwords corpus for removing stopwords and wordnet for lemmatizing.

nltk.download('stopwords')

nltk.download('wordnet')

[nltk_data] Downloading package stopwords to /root/nltk_data...

[nltk_data] Package stopwords is already up-to-date!

[nltk_data] Downloading package wordnet to /root/nltk_data...

[nltk_data] Package wordnet is already up-to-date!

True

Get 10ks

We'll be running NLP analysis on 10-k documents. To do that, we first need to download the documents. For this project, we'll download 10-ks for a few companies. To look up documents for these companies, we'll use their CIK (Central Index Key). If you would like to run this against other stocks, we've provided the dict additional_cik for more stocks. However, the more stocks you try, the longer it will take to run.

cik_lookup = {

'AMZN': '0001018724',

'BMY': '0000014272',

'CNP': '0001130310',

'CVX': '0000093410',

'FL': '0000850209',

'FRT': '0000034903',

'HON': '0000773840'}

additional_cik = {

'AEP': '0000004904',

'AXP': '0000004962',

'BA': '0000012927',

'BK': '0001390777',

'CAT': '0000018230',

'DE': '0000315189',

'DIS': '0001001039',

'DTE': '0000936340',

'ED': '0001047862',

'EMR': '0000032604',

'ETN': '0001551182',

'GE': '0000040545',

'IBM': '0000051143',

'IP': '0000051434',

'JNJ': '0000200406',

'KO': '0000021344',

'LLY': '0000059478',

'MCD': '0000063908',

'MO': '0000764180',

'MRK': '0000310158',

'MRO': '0000101778',

'PCG': '0001004980',

'PEP': '0000077476',

'PFE': '0000078003',

'PG': '0000080424',

'PNR': '0000077360',

'SYY': '0000096021',

'TXN': '0000097476',

'UTX': '0000101829',

'WFC': '0000072971',

'WMT': '0000104169',

'WY': '0000106535',

'XOM': '0000034088'}

Get list of 10-ks

The SEC has a limit on the number of calls you can make to the website per second. In order to avoid hitting that limit, we've created the SecAPI class. This will cache data from the SEC and prevent you from going over the limit.
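To make the idea concrete, here is a minimal sketch of a getter that caches responses and spaces out real requests. It is not the code of project_helper.SecAPI; the class name, the `fetch` callable and the default of 10 calls per second are assumptions made for illustration only.

```python
import time


class CachedRateLimitedGetter:
    """Hypothetical illustration of caching plus rate limiting (not SecAPI itself)."""

    def __init__(self, fetch, max_calls_per_second=10,
                 clock=time.monotonic, sleep=time.sleep):
        self.fetch = fetch                          # callable that downloads a URL
        self.min_interval = 1.0 / max_calls_per_second
        self.clock = clock
        self.sleep = sleep
        self.cache = {}
        self.last_call = None

    def get(self, url):
        # Repeated requests are answered from memory, without a new call.
        if url in self.cache:
            return self.cache[url]
        # Wait until enough time has passed since the previous real request.
        if self.last_call is not None:
            wait = self.min_interval - (self.clock() - self.last_call)
            if wait > 0:
                self.sleep(wait)
        self.last_call = self.clock()
        self.cache[url] = self.fetch(url)
        return self.cache[url]


# A fake fetch function stands in for the network so the sketch runs offline.
calls = []
getter = CachedRateLimitedGetter(lambda url: calls.append(url) or 'body of ' + url)
getter.get('https://example.com/a')
getter.get('https://example.com/a')   # served from the cache
print(len(calls))
```

Passing the clock and sleep functions in as parameters is a design choice that keeps the sketch easy to test without real waiting.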

sec_api = project_helper.SecAPI()

With the class constructed, let's pull a list of filed 10-ks from the SEC for each company.

from bs4 import BeautifulSoup

def get_sec_data(cik, doc_type, start=0, count=60):
    # Only keep filings made on or before the last date we have pricing for
    newest_pricing_data = pd.to_datetime('2018-01-01')
    rss_url = 'https://www.sec.gov/cgi-bin/browse-edgar?action=getcompany' \
        '&CIK={}&type={}&start={}&count={}&owner=exclude&output=atom' \
        .format(cik, doc_type, start, count)
    sec_data = sec_api.get(rss_url)
    feed = BeautifulSoup(sec_data.encode('ascii'), 'xml').feed
    # Each entry becomes a (filing url, filing type, filing date) tuple
    entries = [
        (
            entry.content.find('filing-href').getText(),
            entry.content.find('filing-type').getText(),
            entry.content.find('filing-date').getText())
        for entry in feed.find_all('entry', recursive=False)
        if pd.to_datetime(entry.content.find('filing-date').getText()) <= newest_pricing_data]

    return entries

Let's pull the list using the get_sec_data function, then display some of the results. For displaying some of the data, we'll use Amazon as an example.

example_ticker = 'AMZN'

sec_data = {}

for ticker, cik in cik_lookup.items():
    sec_data[ticker] = get_sec_data(cik, '10-K')

pprint.pprint(sec_data[example_ticker][:5])

[('https://www.sec.gov/Archives/edgar/data/1018724/000101872417000011/0001018724-17-000011-index.htm',

'10-K',

'2017-02-10'),

('https://www.sec.gov/Archives/edgar/data/1018724/000101872416000172/0001018724-16-000172-index.htm',

'10-K',

'2016-01-29'),

('https://www.sec.gov/Archives/edgar/data/1018724/000101872415000006/0001018724-15-000006-index.htm',

'10-K',

'2015-01-30'),

('https://www.sec.gov/Archives/edgar/data/1018724/000101872414000006/0001018724-14-000006-index.htm',

'10-K',

'2014-01-31'),

('https://www.sec.gov/Archives/edgar/data/1018724/000119312513028520/0001193125-13-028520-index.htm',

'10-K',

'2013-01-30')]

Download 10-ks

As you can see, this is a list of URLs. These URLs point to a file that contains metadata related to each filing. Since we don't care about the metadata, we'll pull the filing itself by replacing the index URL with the filing URL.
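As a small illustration of that replacement, the sketch below applies the same two string substitutions used in the download loop to one of the Amazon index URLs listed above. The helper name `index_url_to_filing_url` is made up for this example.

```python
def index_url_to_filing_url(index_url):
    # '-index.htm' becomes '.txt'; an '-index.html' URL would leave '.txtl',
    # which the second replace turns back into '.txt'.
    return index_url.replace('-index.htm', '.txt').replace('.txtl', '.txt')


index_url = ('https://www.sec.gov/Archives/edgar/data/1018724/'
             '000101872417000011/0001018724-17-000011-index.htm')
filing_url = index_url_to_filing_url(index_url)
print(filing_url)
```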

raw_fillings_by_ticker = {}

for ticker, data in sec_data.items():
    raw_fillings_by_ticker[ticker] = {}
    for index_url, file_type, file_date in tqdm(data, desc='Downloading {} Fillings'.format(ticker), unit='filling'):
        if (file_type == '10-K'):
            # Swap the index page URL for the URL of the full text filing
            file_url = index_url.replace('-index.htm', '.txt').replace('.txtl', '.txt')
            raw_fillings_by_ticker[ticker][file_date] = sec_api.get(file_url)

print('Example Document:\n\n{}...'.format(next(iter(raw_fillings_by_ticker[example_ticker].values()))[:1000]))

Downloading AMZN Fillings: 100%|██████████| 22/22 [00:03<00:00, 6.04filling/s]

Downloading BMY Fillings: 100%|██████████| 27/27 [00:06<00:00, 4.39filling/s]

Downloading CNP Fillings: 100%|██████████| 19/19 [00:05<00:00, 3.78filling/s]

Downloading CVX Fillings: 100%|██████████| 25/25 [00:06<00:00, 3.74filling/s]

Downloading FL Fillings: 100%|██████████| 22/22 [00:03<00:00, 6.80filling/s]

Downloading FRT Fillings: 100%|██████████| 29/29 [00:04<00:00, 6.14filling/s]

Downloading HON Fillings: 100%|██████████| 25/25 [00:07<00:00, 3.47filling/s]

Example Document:

<SEC-DOCUMENT>0001018724-17-000011.txt : 20170210

<SEC-HEADER>0001018724-17-000011.hdr.sgml : 20170210

<ACCEPTANCE-DATETIME>20170209175636

ACCESSION NUMBER: 0001018724-17-000011

CONFORMED SUBMISSION TYPE: 10-K

PUBLIC DOCUMENT COUNT: 92

CONFORMED PERIOD OF REPORT: 20161231

FILED AS OF DATE: 20170210

DATE AS OF CHANGE: 20170209

FILER:

COMPANY DATA:

COMPANY CONFORMED NAME: AMAZON COM INC

CENTRAL INDEX KEY: 0001018724

STANDARD INDUSTRIAL CLASSIFICATION: RETAIL-CATALOG & MAIL-ORDER HOUSES [5961]

IRS NUMBER: 911646860

STATE OF INCORPORATION: DE

FISCAL YEAR END: 1231

FILING VALUES:

FORM TYPE: 10-K

SEC ACT: 1934 Act

SEC FILE NUMBER: 000-22513

FILM NUMBER: 17588807

BUSINESS ADDRESS:

STREET 1: 410 TERRY AVENUE NORTH

CITY: SEATTLE

STATE: WA

ZIP: 98109

BUSINESS PHONE: 2062661000

MAIL ADDRESS:

STREET 1: 410 TERRY AVENUE NORTH

CITY: SEATTLE

STATE: WA

ZIP: 98109

</SEC-HEADER>

<DOCUMENT>

<TYPE>10-K

<SEQUENCE>1

<FILENAME...

Get Documents

With these filings downloaded, we want to break them into their associated documents. These documents are sectioned off in the filings with the tags <DOCUMENT> for the start of each document and </DOCUMENT> for the end of each document. There's no overlap between these documents, so each </DOCUMENT> tag should come after its <DOCUMENT> tag with no other <DOCUMENT> tag in between.

Implement get_documents to return a list of these documents from a filing. Make sure not to include the tags in the returned document text.
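To see what the tag structure looks like in practice, here is a minimal sketch on a made-up filing string. It uses a single non-greedy pattern, which is one possible way to pair the tags under the no-overlap assumption stated above; `DOCUMENT_PATTERN` and `toy_filing` are names invented for this example.

```python
import re

# re.DOTALL lets '.' match newlines; '.*?' is non-greedy, so each match
# stops at the first closing tag that follows an opening tag.
DOCUMENT_PATTERN = re.compile(r'<DOCUMENT>(.*?)</DOCUMENT>', re.DOTALL)

toy_filing = (
    '<SEC-HEADER>header text</SEC-HEADER>\n'
    '<DOCUMENT>\n<TYPE>10-K\nfirst body\n</DOCUMENT>\n'
    '<DOCUMENT>\n<TYPE>EX-21.1\nsecond body\n</DOCUMENT>\n'
)

toy_documents = DOCUMENT_PATTERN.findall(toy_filing)
print(len(toy_documents))
print(repr(toy_documents[0]))
```

Only the text between the tags is captured, so the tags themselves never appear in the returned strings.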

import re

def get_documents(text):
    """
    Extract the documents from the text

    Parameters
    ----------
    text : str
        The text with the document strings inside

    Returns
    -------
    extracted_docs : list of str
        The document strings found in `text`
    """
    # Find where each document body starts (just after <DOCUMENT>)
    # and where it ends (just before </DOCUMENT>)
    doc_start_pattern = re.compile(r'<DOCUMENT>')
    doc_end_pattern = re.compile(r'</DOCUMENT>')
    doc_start_is = [x.end() for x in doc_start_pattern.finditer(text)]
    doc_end_is = [x.start() for x in doc_end_pattern.finditer(text)]

    # Documents don't overlap, so the i-th start pairs with the i-th end
    extracted_docs = []
    for doc_start_i, doc_end_i in zip(doc_start_is, doc_end_is):
        extracted_docs.append(text[doc_start_i:doc_end_i])

    return extracted_docs

project_tests.test_get_documents(get_documents)

Tests Passed

With the get_documents function implemented, let's extract all the documents.

filling_documents_by_ticker = {}

for ticker, raw_fillings in raw_fillings_by_ticker.items():
    filling_documents_by_ticker[ticker] = {}
    for file_date, filling in tqdm(raw_fillings.items(), desc='Getting Documents from {} Fillings'.format(ticker), unit='filling'):
        filling_documents_by_ticker[ticker][file_date] = get_documents(filling)

print('\n\n'.join([
    'Document {} Filed on {}:\n{}...'.format(doc_i, file_date, doc[:200])
    for file_date, docs in filling_documents_by_ticker[example_ticker].items()
    for doc_i, doc in enumerate(docs)][:3]))

Getting Documents from AMZN Fillings: 100%|██████████| 17/17 [00:00<00:00, 83.03filling/s]

Getting Documents from BMY Fillings: 100%|██████████| 23/23 [00:00<00:00, 40.42filling/s]

Getting Documents from CNP Fillings: 100%|██████████| 15/15 [00:00<00:00, 35.94filling/s]

Getting Documents from CVX Fillings: 100%|██████████| 21/21 [00:00<00:00, 25.70filling/s]

Getting Documents from FL Fillings: 100%|██████████| 16/16 [00:00<00:00, 29.16filling/s]

Getting Documents from FRT Fillings: 100%|██████████| 19/19 [00:00<00:00, 27.12filling/s]

Getting Documents from HON Fillings: 100%|██████████| 20/20 [00:00<00:00, 20.94filling/s]

Document 0 Filed on 2017-02-10:

<TYPE>10-K

<SEQUENCE>1

<FILENAME>amzn-20161231x10k.htm

<DESCRIPTION>FORM 10-K

<TEXT>

<!DOCTYPE html PUBLIC "-//W3C//DTD HTML 4.01 Transitional//EN" "http://www.w3.org/TR/html4/loose.dtd">

<html>

<he...

Document 1 Filed on 2017-02-10:

<TYPE>EX-12.1

<SEQUENCE>2

<FILENAME>amzn-20161231xex121.htm

<DESCRIPTION>COMPUTATION OF RATIO OF EARNINGS TO FIXED CHARGES

<TEXT>

<!DOCTYPE html PUBLIC "-//W3C//DTD HTML 4.01 Transitional//EN" "http:...

Document 2 Filed on 2017-02-10:

<TYPE>EX-21.1

<SEQUENCE>3

<FILENAME>amzn-20161231xex211.htm

<DESCRIPTION>LIST OF SIGNIFICANT SUBSIDIARIES

<TEXT>

<!DOCTYPE html PUBLIC "-//W3C//DTD HTML 4.01 Transitional//EN" "http://www.w3.org/TR/h...

Get Document Types

Now that we have all the documents, we want to find the 10-k form in this 10-k filing. Implement the get_document_type function to return the type of document given. The document type is located on a line with the <TYPE> tag. For example, a form of type "TEST" would have the line <TYPE>TEST. Make sure to return the type as lowercase, so this example would be returned as "test".
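The "TEST" example can be checked with a short sketch. This is one possible way to read the tag, using a capture group; the names `TYPE_PATTERN` and `sketch_document_type` are invented here, and returning None when no tag is present is an assumption of this sketch rather than part of the project specification.

```python
import re

# Capture everything after <TYPE> up to the end of that line.
TYPE_PATTERN = re.compile(r'<TYPE>([^\n]+)')


def sketch_document_type(doc):
    match = TYPE_PATTERN.search(doc)
    # Assumption: return None when the document has no <TYPE> line.
    return match.group(1).strip().lower() if match else None


print(sketch_document_type('\n<TYPE>TEST\n<SEQUENCE>1\n'))
```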

def get_document_type(doc):
    """
    Return the document type lowercased

    Parameters
    ----------
    doc : str
        The document string

    Returns
    -------
    doc_type : str
        The document type lowercased
    """
    # Match the <TYPE> tag and everything after it up to the end of the line
    type_pattern = re.compile(r'<TYPE>[^\n]+')
    # Strip the tag itself so only the type name remains
    doc_type = [x[len('<TYPE>'):] for x in type_pattern.findall(doc)]

    return doc_type[0].lower()

project_tests.test_get_document_type(get_document_type)

Tests Passed

With the get_document_type function, we'll filter out all non-10-k documents.

ten_ks_by_ticker = {}

for ticker, filling_documents in filling_documents_by_ticker.items():
    ten_ks_by_ticker[ticker] = []
    for file_date, documents in filling_documents.items():
        for document in documents:
            if get_document_type(document) == '10-k':
                ten_ks_by_ticker[ticker].append({
                    'cik': cik_lookup[ticker],
                    'file': document,
                    'file_date': file_date})

project_helper.print_ten_k_data(ten_ks_by_ticker[example_ticker][:5], ['cik', 'file', 'file_date'])

[

{

cik: '0001018724'

file: '\n<TYPE>10-K\n<SEQUENCE>1\n<FILENAME>amzn-2016123...

file_date: '2017-02-10'},

{

cik: '0001018724'

file: '\n<TYPE>10-K\n<SEQUENCE>1\n<FILENAME>amzn-2015123...

file_date: '2016-01-29'},

{

cik: '0001018724'

file: '\n<TYPE>10-K\n<SEQUENCE>1\n<FILENAME>amzn-2014123...

file_date: '2015-01-30'},

{

cik: '0001018724'

file: '\n<TYPE>10-K\n<SEQUENCE>1\n<FILENAME>amzn-2013123...

file_date: '2014-01-31'},

{

cik: '0001018724'

file: '\n<TYPE>10-K\n<SEQUENCE>1\n<FILENAME>d445434d10k....

file_date: '2013-01-30'},

]

Preprocess the Data

Clean Up

As you can see, the text of the documents is very messy. To clean this up, we'll remove the HTML and lowercase all the text.

def remove_html_tags(text):
    # Parse the markup and keep only the visible text
    text = BeautifulSoup(text, 'html.parser').get_text()

    return text

def clean_text(text):
    text = text.lower()
    text = remove_html_tags(text)

    return text

Using the clean_text function, we'll clean up all the documents.

for ticker, ten_ks in ten_ks_by_ticker.items():
    for ten_k in tqdm(ten_ks, desc='Cleaning {} 10-Ks'.format(ticker), unit='10-K'):
        ten_k['file_clean'] = clean_text(ten_k['file'])

project_helper.print_ten_k_data(ten_ks_by_ticker[example_ticker][:5], ['file_clean'])

Cleaning AMZN 10-Ks: 100%|██████████| 17/17 [00:35<00:00, 2.07s/10-K]

Cleaning BMY 10-Ks: 100%|██████████| 23/23 [01:13<00:00, 3.19s/10-K]

Cleaning CNP 10-Ks: 100%|██████████| 15/15 [00:54<00:00, 3.61s/10-K]

Cleaning CVX 10-Ks: 100%|██████████| 21/21 [01:52<00:00, 5.34s/10-K]

Cleaning FL 10-Ks: 100%|██████████| 16/16 [00:26<00:00, 1.63s/10-K]

Cleaning FRT 10-Ks: 100%|██████████| 19/19 [00:53<00:00, 2.83s/10-K]

Cleaning HON 10-Ks: 100%|██████████| 20/20 [00:59<00:00, 2.98s/10-K]

[

{

file_clean: '\n10-k\n1\namzn-20161231x10k.htm\nform 10-k\n\n\n...},

{

file_clean: '\n10-k\n1\namzn-20151231x10k.htm\nform 10-k\n\n\n...},

{

file_clean: '\n10-k\n1\namzn-20141231x10k.htm\nform 10-k\n\n\n...},

{

file_clean: '\n10-k\n1\namzn-20131231x10k.htm\nform 10-k\n\n\n...},

{

file_clean: '\n10-k\n1\nd445434d10k.htm\nform 10-k\n\n\nform 1...},

]

Lemmatize

With the text cleaned up, it's time to distill the verbs down. Implement the lemmatize_words function to lemmatize verbs in the list of words provided.

from nltk.stem import WordNetLemmatizer

from nltk.corpus import wordnet

def lemmatize_words(words):
    """
    Lemmatize words

    Parameters
    ----------
    words : list of str
        List of words

    Returns
    -------
    lemmatized_words : list of str
        List of lemmatized words
    """
    # Build the lemmatizer once and treat every word as a verb (pos='v')
    lemmatizer = WordNetLemmatizer()
    lemmatized_words = [lemmatizer.lemmatize(w, pos='v') for w in words]

    return lemmatized_words

project_tests.test_lemmatize_words(lemmatize_words)

Tests Passed

With the lemmatize_words function implemented, let's lemmatize all the data.

# Raw string so that \w is passed to the regex engine unchanged
word_pattern = re.compile(r'\w+')

for ticker, ten_ks in ten_ks_by_ticker.items():
    for ten_k in tqdm(ten_ks, desc='Lemmatize {} 10-Ks'.format(ticker), unit='10-K'):
        ten_k['file_lemma'] = lemmatize_words(word_pattern.findall(ten_k['file_clean']))

project_helper.print_ten_k_data(ten_ks_by_ticker[example_ticker][:5], ['file_lemma'])

Lemmatize AMZN 10-Ks: 100%|██████████| 17/17 [00:04<00:00, 3.5310-K/s]

Lemmatize BMY 10-Ks: 100%|██████████| 23/23 [00:10<00:00, 2.1710-K/s]

Lemmatize CNP 10-Ks: 100%|██████████| 15/15 [00:08<00:00, 1.7110-K/s]

Lemmatize CVX 10-Ks: 100%|██████████| 21/21 [00:10<00:00, 2.0610-K/s]

Lemmatize FL 10-Ks: 100%|██████████| 16/16 [00:04<00:00, 3.8510-K/s]

Lemmatize FRT 10-Ks: 100%|██████████| 19/19 [00:06<00:00, 2.9610-K/s]

Lemmatize HON 10-Ks: 100%|██████████| 20/20 [00:06<00:00, 3.3110-K/s]

[

{

file_lemma: '['10', 'k', '1', 'amzn', '20161231x10k', 'htm', '...},

{

file_lemma: '['10', 'k', '1', 'amzn', '20151231x10k', 'htm', '...},

{

file_lemma: '['10', 'k', '1', 'amzn', '20141231x10k', 'htm', '...},

{

file_lemma: '['10', 'k', '1', 'amzn', '20131231x10k', 'htm', '...},

{

file_lemma: '['10', 'k', '1', 'd445434d10k', 'htm', 'form', '1...},

]

Remove Stopwords

from nltk.corpus import stopwords

lemma_english_stopwords = lemmatize_words(stopwords.words('english'))

for ticker, ten_ks in ten_ks_by_ticker.items():
    for ten_k in tqdm(ten_ks, desc='Remove Stop Words for {} 10-Ks'.format(ticker), unit='10-K'):
        ten_k['file_lemma'] = [word for word in ten_k['file_lemma'] if word not in lemma_english_stopwords]

print('Stop Words Removed')

Remove Stop Words for AMZN 10-Ks: 100%|██████████| 17/17 [00:02<00:00, 8.1010-K/s]

Remove Stop Words for BMY 10-Ks: 100%|██████████| 23/23 [00:04<00:00, 5.0410-K/s]

Remove Stop Words for CNP 10-Ks: 100%|██████████| 15/15 [00:03<00:00, 3.9710-K/s]

Remove Stop Words for CVX 10-Ks: 100%|██████████| 21/21 [00:04<00:00, 4.6810-K/s]

Remove Stop Words for FL 10-Ks: 100%|██████████| 16/16 [00:01<00:00, 9.0510-K/s]

Remove Stop Words for FRT 10-Ks: 100%|██████████| 19/19 [00:02<00:00, 6.6010-K/s]

Remove Stop Words for HON 10-Ks: 100%|██████████| 20/20 [00:02<00:00, 7.6110-K/s]

Stop Words Removed

Analysis on 10ks

Loughran McDonald Sentiment Word Lists

We'll be using the Loughran and McDonald sentiment word lists. These word lists cover the following sentiments:

- Negative

- Positive

- Uncertainty

- Litigious

- Constraining

- Superfluous

- Interesting

- Modal

This will allow us to do the sentiment analysis on the 10-ks. Let's first load these word lists. We'll be looking into a few of these sentiments.

import os

sentiments = ['negative', 'positive', 'uncertainty', 'litigious', 'constraining', 'interesting']

sentiment_df = pd.read_csv(os.path.join('..', '..', 'data', 'project_5_loughran_mcdonald', 'loughran_mcdonald_master_dic_2016.csv'))

sentiment_df.columns = [column.lower() for column in sentiment_df.columns] # Lowercase the columns for ease of use

# Remove unused information

sentiment_df = sentiment_df[sentiments + ['word']]

sentiment_df[sentiments] = sentiment_df[sentiments].astype(bool)

sentiment_df = sentiment_df[(sentiment_df[sentiments]).any(axis=1)]  # keep words flagged for at least one sentiment

# Apply the same preprocessing to these words as the 10-k words

sentiment_df['word'] = lemmatize_words(sentiment_df['word'].str.lower())

sentiment_df = sentiment_df.drop_duplicates('word')

sentiment_df.head()

| | negative | positive | uncertainty | litigious | constraining | interesting | word |
|---|---|---|---|---|---|---|---|
| 9 | True | False | False | False | False | False | abandon |
| 12 | True | False | False | False | False | False | abandonment |
| 13 | True | False | False | False | False | False | abandonments |
| 51 | True | False | False | False | False | False | abdicate |
| 54 | True | False | False | False | False | False | abdication |

Bag of Words

Using the sentiment word lists, let's generate a sentiment bag of words from the 10-k documents. Implement get_bag_of_words to generate a bag of words that counts the number of sentiment words in each doc. You can ignore words that are not in sentiment_words.
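To show what such a count matrix looks like, here is a minimal sketch that uses only the standard library on made-up inputs. It splits on whitespace, which is a simplification: CountVectorizer lowercases text and, by default, keeps only tokens of two or more alphanumeric characters, so results can differ on real documents. The names `sketch_bag_of_words`, `toy_words` and `toy_docs` are invented for this example.

```python
from collections import Counter


def sketch_bag_of_words(sentiment_words, docs):
    rows = []
    for doc in docs:
        counts = Counter(doc.split())            # word -> occurrences in this doc
        # One column per sentiment word; words outside the list are ignored.
        rows.append([counts[word] for word in sentiment_words])
    return rows


toy_words = ['abandon', 'loss', 'risk']
toy_docs = ['risk of loss and more risk', 'no sentiment here']
toy_bag = sketch_bag_of_words(toy_words, toy_docs)
print(toy_bag)
```

Each row corresponds to a document and each column to a sentiment word, matching the layout described in the docstring of get_bag_of_words.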

from collections import defaultdict, Counter

from sklearn.feature_extraction.text import CountVectorizer

def get_bag_of_words(sentiment_words, docs):
    """
    Generate a bag of words from documents for a certain sentiment

    Parameters
    ----------
    sentiment_words: Pandas Series
        Words that signify a certain sentiment
    docs : list of str
        List of documents used to generate bag of words

    Returns
    -------
    bag_of_words : 2-d Numpy Ndarray of int
        Bag of words sentiment for each document
        The first dimension is the document.
        The second dimension is the word.
    """
    # @example https://blog.csdn.net/weixin_38278334/article/details/82320307
    # Restrict the vocabulary to the sentiment words so that all other words are ignored
    cnt_vec = CountVectorizer(vocabulary=sentiment_words)
    bag_of_words = cnt_vec.fit_transform(docs).toarray()

    return bag_of_words

project_tests.test_get_bag_of_words(get_bag_of_words)

Tests Passed

Using the get_bag_of_words function, we'll generate a bag of words for all the documents.

sentiment_bow_ten_ks = {}

for ticker, ten_ks in ten_ks_by_ticker.items():
    lemma_docs = [' '.join(ten_k['file_lemma']) for ten_k in ten_ks]

    sentiment_bow_ten_ks[ticker] = {
        sentiment: get_bag_of_words(sentiment_df[sentiment_df[sentiment]]['word'], lemma_docs)
        for sentiment in sentiments}

project_helper.print_ten_k_data([sentiment_bow_ten_ks[example_ticker]], sentiments)

[

{

negative: '[[0 0 0 ..., 0 0 0]\n [0 0 0 ..., 0 0 0]\n [0 0 0...

positive: '[[16 0 0 ..., 0 0 0]\n [16 0 0 ..., 0 0 ...

uncertainty: '[[0 0 0 ..., 1 1 3]\n [0 0 0 ..., 1 1 3]\n [0 0 0...

litigious: '[[0 0 0 ..., 0 0 0]\n [0 0 0 ..., 0 0 0]\n [0 0 0...

constraining: '[[0 0 0 ..., 0 0 2]\n [0 0 0 ..., 0 0 2]\n [0 0 0...

interesting: '[[2 0 0 ..., 0 0 0]\n [2 0 0 ..., 0 0 0]\n [2 0 0...},

]

Jaccard Similarity

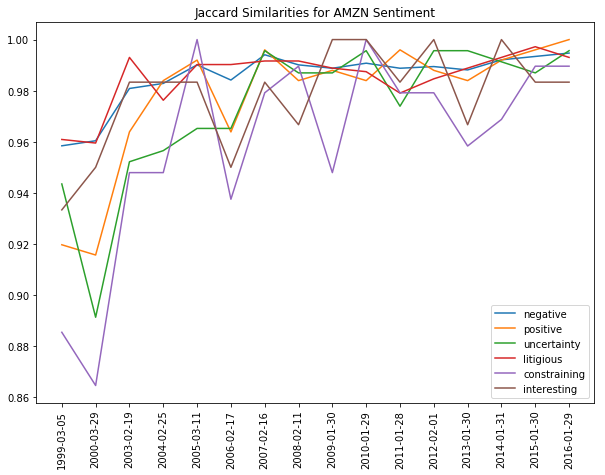

Using the bag of words, let's calculate the jaccard similarity on the bag of words and plot it over time. Implement get_jaccard_similarity to return the jaccard similarities between each tick in time. Since the input, bag_of_words_matrix, is a bag of words for each time period in order, you just need to compute the jaccard similarities for each neighboring bag of words. Make sure to turn the bag of words into a boolean array when calculating the jaccard similarity.
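As a rough illustration of the measure itself, the sketch below computes the set-based Jaccard similarity (size of the intersection divided by size of the union) for neighboring rows of a made-up count matrix. The helper names are invented, treating two empty rows as identical is an assumption of this sketch, and the numbers need not match what `jaccard_similarity_score` returns in the scikit-learn version used in this project.

```python
def sketch_jaccard(counts_a, counts_b):
    # Turn counts into "word is present" sets, mirroring the boolean conversion.
    present_a = {i for i, count in enumerate(counts_a) if count > 0}
    present_b = {i for i, count in enumerate(counts_b) if count > 0}
    union = present_a | present_b
    if not union:
        return 1.0  # assumption: two empty documents count as identical
    return len(present_a & present_b) / len(union)


def sketch_neighbor_jaccard(bag_of_words_rows):
    # Pair each row with the row that follows it.
    return [sketch_jaccard(u, v)
            for u, v in zip(bag_of_words_rows, bag_of_words_rows[1:])]


toy_rows = [[2, 0, 1, 0], [1, 1, 0, 0], [0, 3, 0, 0]]
toy_jaccard = sketch_neighbor_jaccard(toy_rows)
print(toy_jaccard)
```

With three documents there are two neighboring pairs, so the result has one fewer entry than the number of rows.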

from sklearn.metrics import jaccard_similarity_score

def get_jaccard_similarity(bag_of_words_matrix):
    """
    Get jaccard similarities for neighboring documents

    Parameters
    ----------
    bag_of_words : 2-d Numpy Ndarray of int
        Bag of words sentiment for each document
        The first dimension is the document.
        The second dimension is the word.

    Returns
    -------
    jaccard_similarities : list of float
        Jaccard similarities for neighboring documents
    """
    # Only the presence of a word matters, not how often it occurs
    bag_of_words = bag_of_words_matrix.astype(bool)
    # Pair each document with the one that follows it
    jaccard_similarities = [jaccard_similarity_score(u, v) for u, v in zip(bag_of_words, bag_of_words[1:])]

    return jaccard_similarities

project_tests.test_get_jaccard_similarity(get_jaccard_similarity)

Tests Passed

Using the get_jaccard_similarity function, let's plot the similarities over time.

# Get dates for the universe

file_dates = {
    ticker: [ten_k['file_date'] for ten_k in ten_ks]
    for ticker, ten_ks in ten_ks_by_ticker.items()}

jaccard_similarities = {
    ticker: {
        sentiment_name: get_jaccard_similarity(sentiment_values)
        for sentiment_name, sentiment_values in ten_k_sentiments.items()}
    for ticker, ten_k_sentiments in sentiment_bow_ten_ks.items()}

project_helper.plot_similarities(
    [jaccard_similarities[example_ticker][sentiment] for sentiment in sentiments],
    file_dates[example_ticker][1:],
    'Jaccard Similarities for {} Sentiment'.format(example_ticker),
    sentiments)

TFIDF

Using the sentiment word lists, let's generate sentiment TFIDF from the 10-k documents. Implement get_tfidf to generate TFIDF from each document, using sentiment words as the terms. You can ignore words that are not in sentiment_words.
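For readers who want to see the arithmetic, here is a minimal sketch of TF-IDF restricted to a fixed word list. It assumes the smoothed IDF formula `ln((1 + n) / (1 + df)) + 1` followed by L2 normalization of each row, which is what TfidfVectorizer does with its default settings; it also splits on whitespace, so it is a simplification of the vectorizer's tokenization. The function and variable names are invented for this example.

```python
import math
from collections import Counter


def sketch_tfidf(sentiment_words, docs):
    n_docs = len(docs)
    counts = [Counter(doc.split()) for doc in docs]
    # Document frequency: in how many documents does each word appear?
    doc_freq = [sum(1 for c in counts if c[word] > 0) for word in sentiment_words]
    # Smoothed inverse document frequency.
    idf = [math.log((1 + n_docs) / (1 + df)) + 1 for df in doc_freq]

    rows = []
    for c in counts:
        row = [c[word] * weight for word, weight in zip(sentiment_words, idf)]
        norm = math.sqrt(sum(value * value for value in row))
        # L2-normalize each document vector; all-zero rows are left as they are.
        rows.append([value / norm for value in row] if norm else row)
    return rows


toy_tfidf = sketch_tfidf(['loss', 'risk'], ['risk risk loss', 'risk'])
print(toy_tfidf)
```

Because every non-empty row is scaled to unit length, the dot product of two rows is already their cosine similarity.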

from sklearn.feature_extraction.text import TfidfVectorizer

def get_tfidf(sentiment_words, docs):
    """
    Generate TFIDF values from documents for a certain sentiment

    Parameters
    ----------
    sentiment_words: Pandas Series
        Words that signify a certain sentiment
    docs : list of str
        List of documents used to generate bag of words

    Returns
    -------
    tfidf : 2-d Numpy Ndarray of float
        TFIDF sentiment for each document
        The first dimension is the document.
        The second dimension is the word.
    """
    # @doc TfidfVectorizer https://www.jianshu.com/p/e2a0aea3630c
    # Restrict the vocabulary to the sentiment words so that all other words are ignored
    vectorizer = TfidfVectorizer(vocabulary=sentiment_words)
    tfidf = vectorizer.fit_transform(docs).toarray()

    return tfidf

project_tests.test_get_tfidf(get_tfidf)

Tests Passed

Using the get_tfidf function, let's generate the TFIDF values for all the documents.

sentiment_tfidf_ten_ks = {}

for ticker, ten_ks in ten_ks_by_ticker.items():
    lemma_docs = [' '.join(ten_k['file_lemma']) for ten_k in ten_ks]

    sentiment_tfidf_ten_ks[ticker] = {
        sentiment: get_tfidf(sentiment_df[sentiment_df[sentiment]]['word'], lemma_docs)
        for sentiment in sentiments}

project_helper.print_ten_k_data([sentiment_tfidf_ten_ks[example_ticker]], sentiments)

[

{

negative: '[[ 0. 0. 0. ..., 0. ...

positive: '[[ 0.22288432 0. 0. ..., 0. ...

uncertainty: '[[ 0. 0. 0. ..., 0.005...

litigious: '[[ 0. 0. 0. ..., 0. 0. 0.]\n [ 0. 0. 0. .....

constraining: '[[ 0. 0. 0. ..., 0. ...

interesting: '[[ 0.01673784 0. 0. ..., 0. ...},

]

Cosine Similarity

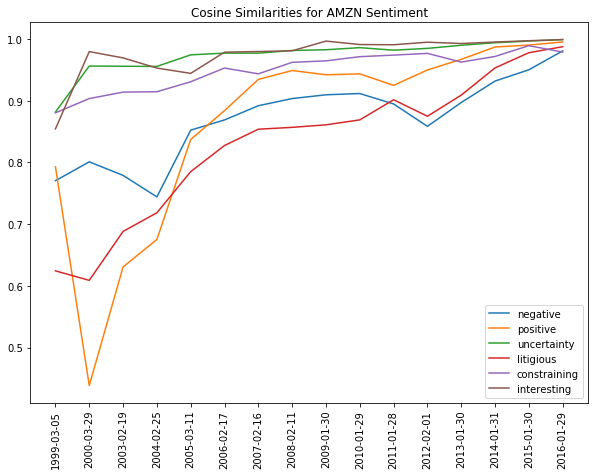

Using the TFIDF values, we'll calculate the cosine similarity and plot it over time. Implement get_cosine_similarity to return the cosine similarities between each tick in time. Since the input, tfidf_matrix, is a TFIDF vector for each time period in order, you just need to compute the cosine similarities for each neighboring vector.
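The neighbor-by-neighbor computation can be sketched without any libraries. The example below applies the usual definition, the dot product divided by the product of the vector lengths, to each row and the row after it. The helper names and the toy vectors are made up, and returning 0.0 for an all-zero vector is an assumption of this sketch.

```python
import math


def sketch_cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0  # assumption: a zero vector is treated as dissimilar
    return dot / (norm_u * norm_v)


def sketch_neighbor_cosine(rows):
    # Compare row i with row i + 1, for every i that has a successor.
    return [sketch_cosine(u, v) for u, v in zip(rows, rows[1:])]


toy_vectors = [[1.0, 0.0], [1.0, 1.0], [0.0, 1.0]]
toy_cosine = sketch_neighbor_cosine(toy_vectors)
print(toy_cosine)
```

In the toy data the first and last vectors are orthogonal, yet both neighboring pairs are 45 degrees apart, which shows why comparing neighbors is different from comparing everything to the first document.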

from sklearn.metrics.pairwise import cosine_similarity

def get_cosine_similarity(tfidf_matrix):
    """
    Get cosine similarities for each neighboring TFIDF vector/document

    Parameters
    ----------
    tfidf : 2-d Numpy Ndarray of float
        TFIDF sentiment for each document
        The first dimension is the document.
        The second dimension is the word.

    Returns
    -------
    cosine_similarities : list of float
        Cosine similarities for neighboring documents
    """
    # Pairwise similarities between documents 0..n-2 and documents 1..n-1.
    # The diagonal pairs document i with document i+1; taking row 0 alone
    # would compare the first document with every later one instead.
    cos_similarity = cosine_similarity(tfidf_matrix[:-1], tfidf_matrix[1:])

    return np.diag(cos_similarity).tolist()

project_tests.test_get_cosine_similarity(get_cosine_similarity)

Tests Passed

Let's plot the cosine similarities over time.

cosine_similarities = {
    ticker: {
        sentiment_name: get_cosine_similarity(sentiment_values)
        for sentiment_name, sentiment_values in ten_k_sentiments.items()}
    for ticker, ten_k_sentiments in sentiment_tfidf_ten_ks.items()}

project_helper.plot_similarities(
    [cosine_similarities[example_ticker][sentiment] for sentiment in sentiments],
    file_dates[example_ticker][1:],
    'Cosine Similarities for {} Sentiment'.format(example_ticker),
    sentiments)

Evaluate Alpha Factors

Just like we did in project 4, let's evaluate the alpha factors. For this section, we'll just be looking at the cosine similarities, but it can be applied to the jaccard similarities as well.

Price Data

Let's get yearly pricing to run the factor against, since 10-Ks are produced annually.

pricing = pd.read_csv('../../data/project_5_yr/yr-quotemedia.csv', parse_dates=['date'])

pricing = pricing.pivot(index='date', columns='ticker', values='adj_close')

pricing.tail(5)

| date | A | AA | AAAP | AABA | AAC | AADR | AAIT | AAL | AAMC | AAME | ... | ZUMZ | ZUO | ZVZZC | ZVZZCNX | ZX | ZXYZ_A | ZYME | ZYNE | ZZK | ZZZ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 2014-01-01 | 39.43238724 | nan | nan | 50.51000000 | 30.92000000 | 36.88899069 | 32.90504993 | 51.89970750 | 310.12000000 | 3.94778326 | ... | 38.63000000 | nan | nan | nan | 1.43000000 | nan | nan | nan | 100.00000000 | 0.50000000 |
| 2015-01-01 | 40.79862571 | nan | 31.27000000 | 33.26000000 | 19.06000000 | 38.06921608 | 30.53000000 | 41.33893271 | 17.16000000 | 4.91270038 | ... | 15.12000000 | nan | nan | nan | 0.79000000 | 14.10000000 | nan | 10.07000000 | 100.00000000 | 1.00000000 |
| 2016-01-01 | 44.93909238 | 28.08000000 | 26.76000000 | 38.67000000 | 7.24000000 | 39.81959334 | nan | 46.08991196 | 53.50000000 | 4.05320152 | ... | 21.85000000 | nan | 100.01000000 | 14.26000000 | 1.19890000 | 8.00000000 | nan | 15.59000000 | 100.00000000 | 25.00000000 |
| 2017-01-01 | 66.65391782 | 53.87000000 | 81.62000000 | 69.85000000 | 9.00000000 | 58.83570736 | nan | 51.80358470 | 81.60000000 | 3.37888199 | ... | 20.82500000 | nan | 100.10000000 | nan | 1.21650000 | nan | 7.59310000 | 12.52000000 | 25.00000000 | 0.01000000 |
| 2018-01-01 | 61.80000000 | 46.88000000 | 81.63000000 | 73.35000000 | 9.81000000 | 52.88000000 | nan | 37.99000000 | 67.90000000 | 2.57500000 | ... | 24.30000000 | 26.74000000 | nan | nan | 1.38000000 | nan | 15.34000000 | 9.17000000 | nan | nan |

5 rows × 11941 columns

Dict to DataFrame

The alphalens library uses dataframes, so we'll need to turn our dictionary into a dataframe.

cosine_similarities_df_dict = {'date': [], 'ticker': [], 'sentiment': [], 'value': []}

for ticker, ten_k_sentiments in cosine_similarities.items():
    for sentiment_name, sentiment_values in ten_k_sentiments.items():
        # Use a separate index name so the list being iterated is not shadowed
        for sentiment_i, sentiment_value in enumerate(sentiment_values):
            cosine_similarities_df_dict['ticker'].append(ticker)
            cosine_similarities_df_dict['sentiment'].append(sentiment_name)
            cosine_similarities_df_dict['value'].append(sentiment_value)
            cosine_similarities_df_dict['date'].append(file_dates[ticker][1:][sentiment_i])

cosine_similarities_df = pd.DataFrame(cosine_similarities_df_dict)
# Keep only the year of each filing date, stored as January 1 of that year
cosine_similarities_df['date'] = pd.DatetimeIndex(cosine_similarities_df['date']).year
cosine_similarities_df['date'] = pd.to_datetime(cosine_similarities_df['date'], format='%Y')

cosine_similarities_df.head()

| | date | ticker | sentiment | value |
|---|---|---|---|---|
| 0 | 2016-01-01 | AMZN | negative | 0.98065125 |
| 1 | 2015-01-01 | AMZN | negative | 0.95001565 |
| 2 | 2014-01-01 | AMZN | negative | 0.93176312 |
| 3 | 2013-01-01 | AMZN | negative | 0.89701433 |
| 4 | 2012-01-01 | AMZN | negative | 0.85838968 |

Alphalens Format

In order to use a lot of the alphalens functions, we need to align the indices and convert the time to unix timestamps. In this next cell, we'll do just that.

import alphalens as al

factor_data = {}
skipped_sentiments = []

for sentiment in sentiments:
    cs_df = cosine_similarities_df[(cosine_similarities_df['sentiment'] == sentiment)]
    cs_df = cs_df.pivot(index='date', columns='ticker', values='value')

    try:
        data = al.utils.get_clean_factor_and_forward_returns(cs_df.stack(), pricing, quantiles=5, bins=None, periods=[1])
        factor_data[sentiment] = data
    except Exception:
        # Skip sentiments that alphalens cannot process
        skipped_sentiments.append(sentiment)

if skipped_sentiments:
    print('\nSkipped the following sentiments:\n{}'.format('\n'.join(skipped_sentiments)))

factor_data[sentiments[0]].head()

/opt/conda/lib/python3.6/site-packages/statsmodels/compat/pandas.py:56: FutureWarning: The pandas.core.datetools module is deprecated and will be removed in a future version. Please use the pandas.tseries module instead.

from pandas.core import datetools

Dropped 0.0% entries from factor data: 0.0% in forward returns computation and 0.0% in binning phase (set max_loss=0 to see potentially suppressed Exceptions).

max_loss is 35.0%, not exceeded: OK!

Dropped 0.0% entries from factor data: 0.0% in forward returns computation and 0.0% in binning phase (set max_loss=0 to see potentially suppressed Exceptions).

max_loss is 35.0%, not exceeded: OK!

Dropped 0.0% entries from factor data: 0.0% in forward returns computation and 0.0% in binning phase (set max_loss=0 to see potentially suppressed Exceptions).

max_loss is 35.0%, not exceeded: OK!

Dropped 0.0% entries from factor data: 0.0% in forward returns computation and 0.0% in binning phase (set max_loss=0 to see potentially suppressed Exceptions).

max_loss is 35.0%, not exceeded: OK!

Dropped 0.0% entries from factor data: 0.0% in forward returns computation and 0.0% in binning phase (set max_loss=0 to see potentially suppressed Exceptions).

max_loss is 35.0%, not exceeded: OK!

Dropped 0.0% entries from factor data: 0.0% in forward returns computation and 0.0% in binning phase (set max_loss=0 to see potentially suppressed Exceptions).

max_loss is 35.0%, not exceeded: OK!

| date | asset | 1D | factor | factor_quantile |
|---|---|---|---|---|
| 1994-01-01 | BMY | 0.53264104 | 0.44665176 | 1 |
| | CVX | 0.22211880 | 0.64730294 | 5 |
| | FRT | 0.17159556 | 0.46696523 | 3 |
| 1995-01-01 | BMY | 0.32152919 | 0.43571094 | 3 |
| | CVX | 0.28478156 | 0.62523346 | 5 |

Alphalens Format with Unix Time

Alphalens's factor_rank_autocorrelation and mean_return_by_quantile functions require unix timestamps to work, so we'll also create factor dataframes with unix time.

unixt_factor_data = {
    factor: data.set_index(pd.MultiIndex.from_tuples(
        [(x.timestamp(), y) for x, y in data.index.values],
        names=['date', 'asset']))
    for factor, data in factor_data.items()}

Factor Returns

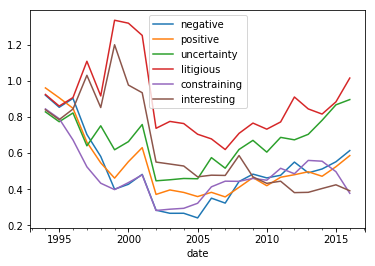

Let's view the factor returns over time. We should be seeing it generally move up and to the right.

ls_factor_returns = pd.DataFrame()

for factor_name, data in factor_data.items():
    ls_factor_returns[factor_name] = al.performance.factor_returns(data).iloc[:, 0]

(1 + ls_factor_returns).cumprod().plot()

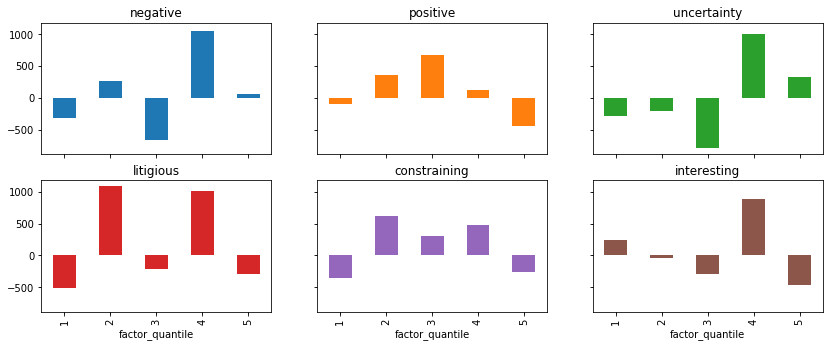

Basis Points Per Day per Quantile

It is not enough to look just at the factor weighted return. A good alpha is also monotonic in quantiles. Let's look at the basis points for the factor returns.

qr_factor_returns = pd.DataFrame()

for factor_name, data in unixt_factor_data.items():
    qr_factor_returns[factor_name] = al.performance.mean_return_by_quantile(data)[0].iloc[:, 0]

# Multiply by 10000 to express the returns in basis points
(10000*qr_factor_returns).plot.bar(
    subplots=True,
    sharey=True,
    layout=(5,3),
    figsize=(14, 14),
    legend=False)

Turnover Analysis

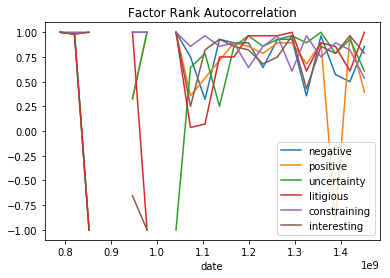

Without doing a full and formal backtest, we can analyze how stable the alphas are over time. Stability in this sense means that from period to period, the alpha ranks do not change much. Since trading is costly, we always prefer, all other things being equal, that the ranks do not change significantly per period. We can measure this with the Factor Rank Autocorrelation (FRA).
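The idea behind this measure can be sketched in a few lines: rank the assets by factor value within each period, then correlate the ranks of one period with the ranks of the next. This is only an illustration of the concept on made-up numbers, not the alphalens implementation; the helper names are invented, and the sketch assumes the same assets in the same order in every period and no tied values.

```python
def sketch_ranks(values):
    # Rank 1 is the smallest value; ties are not handled in this sketch.
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0] * len(values)
    for rank, i in enumerate(order, start=1):
        ranks[i] = rank
    return ranks


def sketch_pearson(x, y):
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def sketch_rank_autocorrelation(factor_by_period):
    ranked = [sketch_ranks(period) for period in factor_by_period]
    # Correlate each period's ranks with the ranks of the period before it.
    return [sketch_pearson(prev, curr) for prev, curr in zip(ranked, ranked[1:])]


toy_factor = [
    [0.45, 0.65, 0.47],   # period 1: made-up factor values for three assets
    [0.44, 0.63, 0.50],   # period 2: same ordering as period 1
    [0.70, 0.40, 0.55],   # period 3: ordering reversed
]
toy_fra = sketch_rank_autocorrelation(toy_factor)
print(toy_fra)
```

A value near 1 means the ordering of the assets barely changed between periods, which implies low turnover; a value near -1 means the ordering flipped.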

ls_FRA = pd.DataFrame()

for factor, data in unixt_factor_data.items():
    ls_FRA[factor] = al.performance.factor_rank_autocorrelation(data)

ls_FRA.plot(title="Factor Rank Autocorrelation")

Sharpe Ratio of the Alphas

The last analysis we'll do on the factors is the Sharpe ratio. Let's see what the Sharpe ratios for the factors are. Generally, a Sharpe ratio of near 1.0 or higher is an acceptable single alpha for this universe.
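As a worked example of the formula, the sketch below computes an annualized Sharpe ratio as the annualization factor times the mean return divided by the standard deviation of returns. The returns are made up, the function name and the `periods_per_year` parameter are introduced only for this illustration, and the sample standard deviation (dividing by n - 1) is used, which is also the default of pandas' `std`.

```python
import math
import statistics


def sketch_sharpe_ratio(returns, periods_per_year=252):
    mean_return = statistics.mean(returns)
    std_return = statistics.stdev(returns)   # sample standard deviation (n - 1)
    # Scale by the square root of the number of periods in a year.
    return math.sqrt(periods_per_year) * mean_return / std_return


toy_returns = [0.01, -0.02, 0.03, 0.00]
toy_sharpe = sketch_sharpe_ratio(toy_returns)
print(round(toy_sharpe, 2))
```

Because the ratio divides the mean by the standard deviation, multiplying every return by the same positive constant leaves it unchanged.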

daily_annualization_factor = np.sqrt(252)

(daily_annualization_factor * ls_factor_returns.mean() / ls_factor_returns.std()).round(2)

negative -0.24000000
positive -1.33000000
uncertainty 0.82000000
litigious 1.34000000
constraining -2.99000000
interesting -2.47000000
dtype: float64

That's it! You've successfully done sentiment analysis on 10-ks!

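Those who act often succeed; those who keep walking often arrive.

Free to repost, non-commercial, no derivatives, attribution required (Creative Commons 3.0 license)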