AI Learning: Building a CNN with the Keras Deep Learning Framework

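Keras is a higher-level deep learning framework written in Python. It runs on top of lower-level frameworks, including TensorFlow and CNTK. In this article Keras runs on TensorFlow, which means Keras calls TensorFlow internally to do the actual work. In other words, Keras gives you a more convenient interface than using TensorFlow directly.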

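Introduction to Keras

Keras is a high-level neural network API, written in Python and capable of running on top of TensorFlow, CNTK, or Theano. It was developed with a focus on enabling fast experimentation. Being able to go from idea to result with the least possible delay is key to doing good research.

Official Keras documentation: https://keras.io/zh/

Advantages

Keras prioritizes developer experience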

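- Keras is an API designed for human beings, not machines. It follows best practices for reducing cognitive load: it offers consistent and simple APIs, it minimizes the number of user actions required for common use cases, and it provides clear and actionable feedback upon user error.

- This makes Keras easy to learn and easy to use. As a Keras user, you are more productive, so you can try more ideas than your competitors, faster, which in turn helps you win machine learning competitions.

- This ease of use does not come at the cost of reduced flexibility: because Keras integrates with lower-level deep learning languages (in particular TensorFlow), it lets you implement anything you could have built in the base language. In particular, as tf.keras, the Keras API integrates seamlessly with your TensorFlow workflows.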

Installation

First, install Keras.

1. Open the Anaconda Prompt.

2. Run activate tensorflow to activate the conda environment named tensorflow.

3. Run pip install --upgrade keras.

4. Run pip install --upgrade pydot.

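Troubleshooting

Note: the Keras version you install must correspond to your TensorFlow version; otherwise you will get an error such as:

ModuleNotFoundError: No module named 'tensorflow.python.eager'

To look up which TensorFlow and Keras versions correspond, see: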

https://docs.floydhub.com/guides/environments/
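Before uninstalling anything, it helps to confirm which versions are actually installed (running pip show tensorflow keras also works). The sketch below is a hypothetical helper, not part of Keras: it reads installed versions with importlib.metadata (Python 3.8+, so newer than the Python 3.6 environment used in this article) and compares them with a small hand-maintained table. The only entry in that table is the TF 1.2.1 / Keras 2.0.6 pair stated in this article; any other pairs would have to come from the compatibility page above.

```python
from importlib.metadata import version, PackageNotFoundError

# Hypothetical lookup table: TensorFlow version -> matching Keras version.
# Only the pair from this article is filled in; extend it from the linked page.
KNOWN_PAIRS = {"1.2.1": "2.0.6"}


def installed_version(dist_name):
    """Return the installed version of a pip distribution, or None if it is absent."""
    try:
        return version(dist_name)
    except PackageNotFoundError:
        return None


def check_pair(tf_version, keras_version):
    """True/False if the TF version is in the table, None if the table doesn't know it."""
    expected = KNOWN_PAIRS.get(tf_version)
    if expected is None:
        return None
    return keras_version == expected


if __name__ == "__main__":
    # GPU builds of TensorFlow 1.x were published as "tensorflow-gpu".
    tf_v = installed_version("tensorflow") or installed_version("tensorflow-gpu")
    keras_v = installed_version("keras")
    print("tensorflow:", tf_v, "keras:", keras_v)
    print("matches table:", check_pair(tf_v, keras_v))
```

A result of None only means the table has no entry for your TensorFlow version, not that the pair is incompatible.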

If the versions do not match, uninstall Keras and install the matching version. On this machine TensorFlow is version 1.2.1, so Keras 2.0.6 should be installed:

pip uninstall keras

pip install keras==2.0.6

3. Smile Recognition Project

import numpy as np

from keras import layers

from keras.layers import Input, Dense, Activation, ZeroPadding2D, BatchNormalization, Flatten, Conv2D

from keras.layers import AveragePooling2D, MaxPooling2D, Dropout, GlobalMaxPooling2D, GlobalAveragePooling2D

from keras.models import Model

from keras.preprocessing import image

from keras.utils import layer_utils

from keras.utils.data_utils import get_file

from keras.applications.imagenet_utils import preprocess_input

import pydot

from IPython.display import SVG

from keras.utils.vis_utils import model_to_dot

from keras.utils import plot_model

from kt_utils import *  # helper module shipped with this exercise; provides load_dataset()

import keras.backend as K

K.set_image_data_format('channels_last')

import matplotlib.pyplot as plt

from matplotlib.pyplot import imshow

%matplotlib inline

Using TensorFlow backend.
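K.set_image_data_format('channels_last') tells Keras that image tensors are laid out as (samples, height, width, channels), which is also the layout of X_train below. The NumPy sketch here uses made-up zero arrays and does not call Keras; it only contrasts the two layouts and shows why, under channels_last, the channel axis is axis 3, the axis that BatchNormalization(axis = 3) normalizes over in the model.

```python
import numpy as np

# A batch of 2 RGB images of 64x64 pixels in "channels_last" layout: (N, H, W, C).
batch_last = np.zeros((2, 64, 64, 3), dtype=np.float32)

# The same data rearranged into "channels_first" layout: (N, C, H, W).
batch_first = np.transpose(batch_last, (0, 3, 1, 2))

print(batch_last.shape)   # (2, 64, 64, 3)
print(batch_first.shape)  # (2, 3, 64, 64)

# Under channels_last, the channel dimension sits at index 3 (equivalently -1).
channel_axis = 3
print(batch_last.shape[channel_axis])  # 3
```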


1 - Spreading Happiness

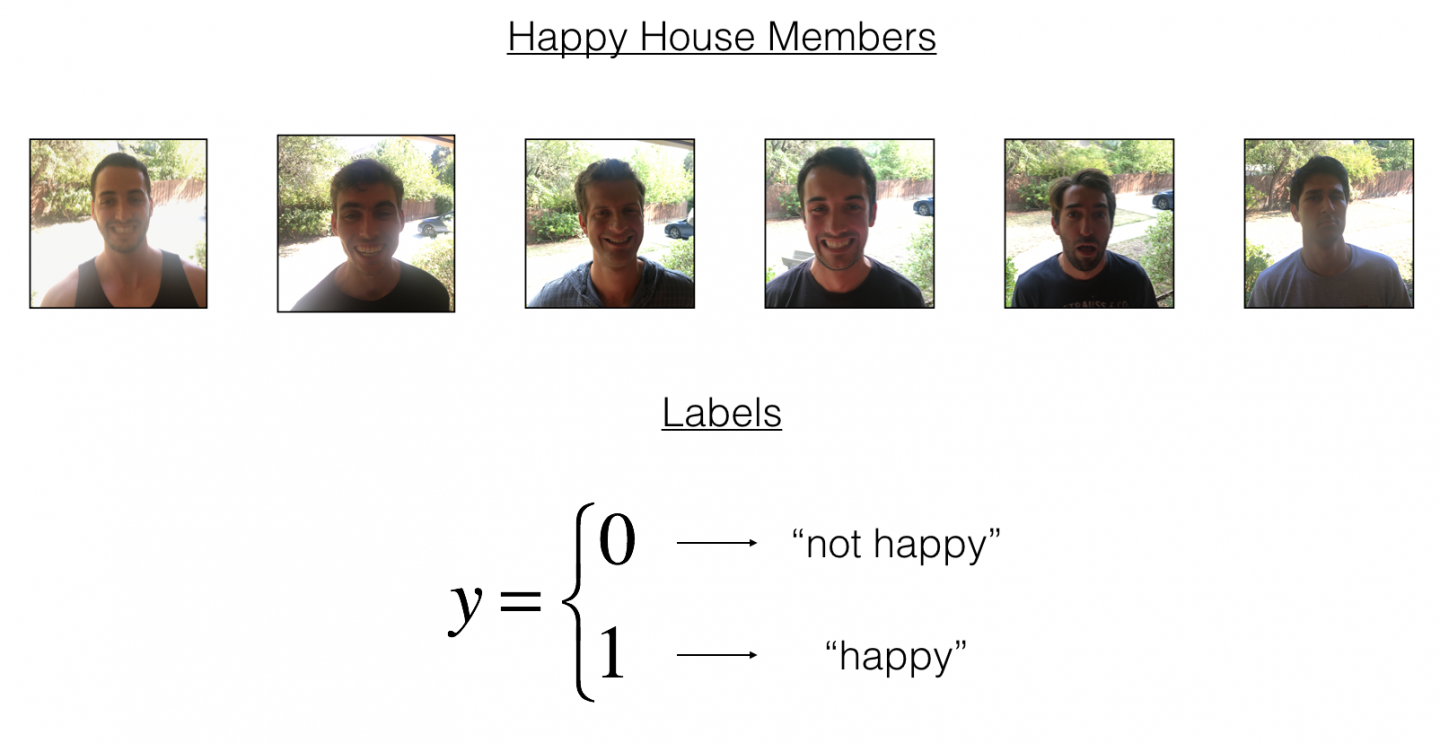

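Life is about enjoying yourself and pursuing happiness; that is what gives it meaning. Suppose you have a long vacation and decide to spend it with five friends in a cozy little house.

Happiness is contagious: if one person is gloomy, it spoils the mood and nobody has fun. So, to make sure everyone who enters the house is happy, you decide to build an AI program that recognizes whether the person at the door is happy. If the face captured by the door camera is happy, the door opens automatically; if it is unhappy, the door stays closed.

Suppose you have photos of yourself and your friends. Every photo in the training set is labeled: 0 for an unhappy face and 1 for a happy face.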
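The loading code below does two things to the raw data: it scales pixel values from [0, 255] to [0, 1] by dividing by 255, and it transposes the labels. The printed Y_train shape of (600, 1) implies load_dataset() returns labels as a (1, m) row vector. The NumPy sketch uses small random stand-in arrays (hypothetical, not the real dataset) to show both steps.

```python
import numpy as np

# Hypothetical stand-ins for load_dataset() output: 4 random 64x64 RGB images
# with uint8 pixels, and made-up labels stored as a (1, m) row vector.
rng = np.random.default_rng(0)
X_orig = rng.integers(0, 256, size=(4, 64, 64, 3)).astype(np.uint8)
Y_orig = np.array([[0, 1, 1, 0]])

X = X_orig / 255.   # pixel values from [0, 255] to [0, 1] (result is float64)
Y = Y_orig.T        # (1, m) row -> (m, 1) column, one label per example

print(X.shape, Y.shape)                 # (4, 64, 64, 3) (4, 1)
print(X.min() >= 0.0, X.max() <= 1.0)   # True True
```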

# Load the training and test data and normalize the pixel values.

X_train_orig, Y_train_orig, X_test_orig, Y_test_orig, classes = load_dataset()

X_train = X_train_orig/255.

X_test = X_test_orig/255.

Y_train = Y_train_orig.T

Y_test = Y_test_orig.T

print("number of training examples = " + str(X_train.shape[0]))
print("number of test examples = " + str(X_test.shape[0]))
print("X_train shape: " + str(X_train.shape))
print("Y_train shape: " + str(Y_train.shape))
print("X_test shape: " + str(X_test.shape))
print("Y_test shape: " + str(Y_test.shape))

number of training examples = 600

number of test examples = 150

X_train shape: (600, 64, 64, 3)

Y_train shape: (600, 1)

X_test shape: (150, 64, 64, 3)

Y_test shape: (150, 1)

2 - Building a Model with Keras

Keras is very good for building models quickly: with it, you can build a well-performing model in a very short time.

The following code builds a model with Keras:

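def model(input_shape):
    # Define the input placeholder X_input; the face images are fed into it later.
    # input_shape gives its dimensions (excluding the batch axis).
    X_input = Input(input_shape)

    # Pad the border of the input with zeros (3 pixels on each side)
    X = ZeroPadding2D((3, 3))(X_input)

    # Convolution layer, then batch normalization, then the activation function
    X = Conv2D(32, (7, 7), strides=(1, 1), name='conv0')(X)
    X = BatchNormalization(axis=3, name='bn0')(X)
    X = Activation('relu')(X)

    # MAXPOOL layer
    X = MaxPooling2D((2, 2), name='max_pool')(X)

    # Flatten the tensor into a vector, then add a fully connected layer
    X = Flatten()(X)
    X = Dense(1, activation='sigmoid', name='fc')(X)

    # Create the Keras model instance; this handle is used later to train and predict
    model = Model(inputs=X_input, outputs=X, name='HappyModel')

    return model

You may have noticed that Keras code tends to reuse the same variable, unlike our earlier NumPy and TensorFlow code. In earlier exercises we defined many variables, such as X, Z1, A1, Z2, A2, and so on. In the code above, X is the input to each layer, and that layer's output is stored back into X.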
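The same "X = layer(X)" style can be used to trace shapes and parameter counts by hand. The sketch below is plain Python arithmetic with hypothetical helper functions (they are not Keras layers); it assumes Conv2D's default padding='valid' and that MaxPooling2D uses a stride equal to its pool size, which are the defaults when no other values are passed, as in the model above.

```python
# Each hypothetical helper maps an (H, W, C) shape to the next layer's shape.

def zero_padding(shape, pad):
    h, w, c = shape
    return (h + 2 * pad, w + 2 * pad, c)

def conv2d(shape, filters, kernel, stride=1):
    h, w, c = shape
    out = ((h - kernel) // stride + 1, (w - kernel) // stride + 1, filters)
    params = kernel * kernel * c * filters + filters   # weights + one bias per filter
    return out, params

def max_pool(shape, pool):
    h, w, c = shape
    return (h // pool, w // pool, c)

def flatten(shape):
    h, w, c = shape
    return (h * w * c,)

X = (64, 64, 3)
X = zero_padding(X, 3)              # (70, 70, 3)
X, conv_params = conv2d(X, 32, 7)   # (64, 64, 32)
bn_params = 4 * X[-1]               # gamma, beta (trainable) + moving mean, moving variance
X = max_pool(X, 2)                  # (32, 32, 32)
X = flatten(X)                      # (32768,)
dense_params = X[0] * 1 + 1         # one sigmoid unit: 32768 weights + 1 bias

total = conv_params + bn_params + dense_params
print(X, conv_params, bn_params, dense_params, total)  # (32768,) 4736 128 32769 37633
```

Calling happyModel.summary() in Keras should report the same per-layer shapes, with the 64 moving-statistics values of the BatchNormalization layer listed as non-trainable.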

def HappyModel(input_shape):
    X_input = Input(input_shape)
    X = ZeroPadding2D((3, 3))(X_input)
    X = Conv2D(32, (7, 7), strides=(1, 1), name='conv0')(X)
    X = BatchNormalization(axis=3, name='bn0')(X)
    X = Activation('relu')(X)
    X = MaxPooling2D((2, 2), name='max_pool')(X)
    X = Flatten()(X)
    X = Dense(1, activation='sigmoid', name='fc')(X)
    model = Model(inputs=X_input, outputs=X, name='HappyModel')
    return model

HappyModel builds the same network as model above, without the comments; it is the version used in the rest of this section. In general, training and testing a model with Keras takes the following four steps:

- Call the function above to create a model instance.

- Call model.compile(optimizer = "...", loss = "...", metrics = ["accuracy"]) to compile the model instance.

- Call model.fit(x = ..., y = ..., epochs = ..., batch_size = ...) to train the model instance.

- Call model.evaluate(x = ..., y = ...) to test the model.
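In this project the model is compiled with loss 'binary_crossentropy' and metric 'accuracy', and these are the two numbers evaluate later returns as preds[0] and preds[1]. The NumPy sketch below shows what the two quantities measure for a sigmoid output; it is an illustration, not Keras's own implementation, which differs in details such as how predictions are clipped and rounded. The labels and predictions are made up.

```python
import numpy as np

def binary_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean binary cross-entropy; predictions are clipped away from 0 and 1 to avoid log(0)."""
    y_pred = np.clip(y_pred, eps, 1 - eps)
    return float(-np.mean(y_true * np.log(y_pred) + (1 - y_true) * np.log(1 - y_pred)))

def binary_accuracy(y_true, y_pred, threshold=0.5):
    """Fraction of examples whose thresholded prediction equals the 0/1 label."""
    return float(np.mean((y_pred > threshold).astype(y_true.dtype) == y_true))

y_true = np.array([[1], [0], [1], [0]], dtype=np.float32)
y_pred = np.array([[0.9], [0.1], [0.8], [0.3]], dtype=np.float32)
print(binary_crossentropy(y_true, y_pred))  # about 0.198
print(binary_accuracy(y_true, y_pred))      # 1.0
```

A model that outputs 0.5 for every example gets a loss of ln 2 (about 0.693); confident wrong predictions push the loss much higher because of the log term.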

### Create the model instance

happyModel = HappyModel(X_train.shape[1:])

print(X_train.shape[1:])

print(happyModel)

(64, 64, 3)

<keras.engine.training.Model object at 0x7f0e1cd685f8>

### Compile the model

happyModel.compile('adam', 'binary_crossentropy', metrics=['accuracy'])

### Train the model. Training may take several minutes, depending on your hardware.

happyModel.fit(X_train, Y_train, epochs=40, batch_size=50)

Epoch 1/40

600/600 [==============================] - 11s - loss: 7.6147 - acc: 0.4733

Epoch 2/40

600/600 [==============================] - 11s - loss: 7.9712 - acc: 0.5000

Epoch 3/40

600/600 [==============================] - 10s - loss: 7.9712 - acc: 0.5000

Epoch 4/40

600/600 [==============================] - 11s - loss: 7.9712 - acc: 0.5000

Epoch 5/40

600/600 [==============================] - 11s - loss: 7.9712 - acc: 0.5000

Epoch 6/40

600/600 [==============================] - 11s - loss: 7.9712 - acc: 0.5000

Epoch 7/40

600/600 [==============================] - 11s - loss: 7.9712 - acc: 0.5000

Epoch 8/40

600/600 [==============================] - 11s - loss: 7.9712 - acc: 0.5000

Epoch 9/40

600/600 [==============================] - 10s - loss: 7.9712 - acc: 0.5000

Epoch 10/40

600/600 [==============================] - 10s - loss: 7.9712 - acc: 0.5000

Epoch 11/40

600/600 [==============================] - 10s - loss: 7.9712 - acc: 0.5000

Epoch 12/40

600/600 [==============================] - 10s - loss: 7.9712 - acc: 0.5000

Epoch 13/40

600/600 [==============================] - 10s - loss: 7.9712 - acc: 0.5000

Epoch 14/40

600/600 [==============================] - 11s - loss: 7.9712 - acc: 0.5000

Epoch 15/40

600/600 [==============================] - 10s - loss: 7.9712 - acc: 0.5000

Epoch 16/40

600/600 [==============================] - 11s - loss: 7.9712 - acc: 0.5000

Epoch 17/40

600/600 [==============================] - 10s - loss: 7.9712 - acc: 0.5000

Epoch 18/40

600/600 [==============================] - 11s - loss: 7.9712 - acc: 0.5000

Epoch 19/40

600/600 [==============================] - 11s - loss: 7.9712 - acc: 0.5000

Epoch 20/40

600/600 [==============================] - 10s - loss: 7.9712 - acc: 0.5000

Epoch 21/40

600/600 [==============================] - 11s - loss: 7.9712 - acc: 0.5000

Epoch 22/40

600/600 [==============================] - 11s - loss: 7.9712 - acc: 0.5000

Epoch 23/40

600/600 [==============================] - 11s - loss: 7.9712 - acc: 0.5000

Epoch 24/40

600/600 [==============================] - 11s - loss: 7.9712 - acc: 0.5000

Epoch 25/40

600/600 [==============================] - 11s - loss: 7.9712 - acc: 0.5000

Epoch 26/40

600/600 [==============================] - 11s - loss: 7.9712 - acc: 0.5000

Epoch 27/40

600/600 [==============================] - 11s - loss: 7.9712 - acc: 0.5000

Epoch 28/40

600/600 [==============================] - 12s - loss: 7.9712 - acc: 0.5000

Epoch 29/40

600/600 [==============================] - 11s - loss: 7.9712 - acc: 0.5000

Epoch 30/40

600/600 [==============================] - 12s - loss: 7.9712 - acc: 0.5000

Epoch 31/40

600/600 [==============================] - 12s - loss: 7.9712 - acc: 0.5000

Epoch 32/40

600/600 [==============================] - 11s - loss: 7.9712 - acc: 0.5000

Epoch 33/40

600/600 [==============================] - 11s - loss: 7.9712 - acc: 0.5000

Epoch 34/40

600/600 [==============================] - 11s - loss: 7.9712 - acc: 0.5000

Epoch 35/40

600/600 [==============================] - 11s - loss: 7.9712 - acc: 0.5000

Epoch 36/40

600/600 [==============================] - 11s - loss: 7.9712 - acc: 0.5000

Epoch 37/40

600/600 [==============================] - 11s - loss: 7.9712 - acc: 0.5000

Epoch 38/40

600/600 [==============================] - 12s - loss: 7.9712 - acc: 0.5000

Epoch 39/40

600/600 [==============================] - 12s - loss: 7.9712 - acc: 0.5000

Epoch 40/40

600/600 [==============================] - 12s - loss: 7.9712 - acc: 0.5000

<keras.callbacks.History at 0x7f0e4c3e19e8>

Note: if you run the fit call above again, Keras continues training from the parameter values learned so far instead of starting over.
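Two things about fit are worth making concrete: the weights live on the model object, so a second call picks up where the first stopped, and batch_size sets how many gradient updates one epoch contains (with 600 examples and batch_size=50 that is 12 updates per epoch, so epochs=40 means 480 updates). The class below is a hypothetical toy, a logistic regression trained with mini-batch gradient descent in NumPy on synthetic data; it is not Keras, it only mimics these two behaviors.

```python
import numpy as np

class TinyLogReg:
    """Hypothetical toy model: weights persist on the object between fit() calls."""

    def __init__(self, n_features, lr=0.5, seed=0):
        self.w = np.zeros((n_features, 1))
        self.b = 0.0
        self.lr = lr
        self.rng = np.random.default_rng(seed)
        self.updates = 0  # total gradient steps taken so far

    def _predict(self, X):
        return 1.0 / (1.0 + np.exp(-(X @ self.w + self.b)))

    def loss(self, X, y):
        p = np.clip(self._predict(X), 1e-7, 1 - 1e-7)
        return float(-np.mean(y * np.log(p) + (1 - y) * np.log(1 - p)))

    def fit(self, X, y, epochs, batch_size):
        m = X.shape[0]
        for _ in range(epochs):
            idx = self.rng.permutation(m)             # reshuffle every epoch
            for start in range(0, m, batch_size):     # ceil(m / batch_size) steps per epoch
                batch = idx[start:start + batch_size]
                err = self._predict(X[batch]) - y[batch]
                self.w -= self.lr * X[batch].T @ err / len(batch)
                self.b -= self.lr * float(np.mean(err))
                self.updates += 1
        return self

# Synthetic, linearly separable data with 600 examples (not the face dataset).
rng = np.random.default_rng(1)
X_toy = rng.normal(size=(600, 2))
y_toy = (X_toy[:, :1] + X_toy[:, 1:] > 0).astype(float)

toy = TinyLogReg(n_features=2)
loss_start = toy.loss(X_toy, y_toy)             # ln 2, since all weights start at 0
toy.fit(X_toy, y_toy, epochs=1, batch_size=50)
loss_after_first = toy.loss(X_toy, y_toy)
toy.fit(X_toy, y_toy, epochs=1, batch_size=50)  # continues from the current weights
print(toy.updates)                              # 24 (12 per epoch)
print(loss_start, loss_after_first, toy.loss(X_toy, y_toy))
```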

### Test the model

preds = happyModel.evaluate(X_test, Y_test, batch_size=32, verbose=1, sample_weight=None)

print()
print("Loss = " + str(preds[0]))
print("Test Accuracy = " + str(preds[1]))

150/150 [==============================] - 1s

Loss = 7.014649340311686

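Test Accuracy = 0.5599999984105428

Note that in this run the training loss stays at 7.9712 and the training accuracy at exactly 0.5000 from epoch 2 onward, which suggests the model got stuck producing the same output for every image instead of learning; a test accuracy of about 0.56 is consistent with that. You can modify the code above and adjust hyperparameters (for example the number of epochs, the batch size, or the optimizer) to explore different results.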
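A quick sanity check for a result like this is to compare it with a constant-prediction baseline: a model that always outputs the same class scores exactly the fraction of the test set belonging to that class. The helpers below are hypothetical, and the labels are made up to illustrate the conditional case where 56% of the test examples share one class; the real class balance of the test set is not shown in this article.

```python
import numpy as np

def constant_baseline_accuracy(y):
    """Best accuracy achievable by predicting the same class for every example."""
    p = float(np.mean(y))
    return max(p, 1.0 - p)

def looks_constant(probs, threshold=0.5):
    """True if every predicted probability falls on the same side of the threshold."""
    labels = np.asarray(probs) > threshold
    return bool(labels.all() or (~labels).all())

# Hypothetical labels: 66 positives and 84 negatives out of 150 examples.
y_test = np.array([1] * 66 + [0] * 84).reshape(-1, 1)
print(constant_baseline_accuracy(y_test))   # about 0.56

probs = np.full((150, 1), 0.999)            # a saturated, constant model output
print(looks_constant(probs))                # True
```

In the notebook, looks_constant(happyModel.predict(X_test)) would show whether the trained model is in this state.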

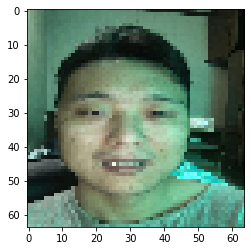

3 - Test with Your Own Image

### Replace this with your own image; load_img below resizes it to 64x64

# img_path = 'images/my_image.jpg'

img_path = 'images/happy.jpeg'

img = image.load_img(img_path, target_size=(64, 64))

imshow(img)

print(img)

x = image.img_to_array(img)

# print(x)

x = np.expand_dims(x, axis=0)

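# Note: imagenet_utils.preprocess_input applies ImageNet-specific preprocessing
# (channel reordering and mean subtraction), which differs from the /255 scaling
# used for X_train; x = x / 255. would match the training preprocessing.
x = preprocess_input(x)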

print(happyModel.predict(x))

# An output near 0 means unhappy, near 1 means happy (the sigmoid output is a probability)

<PIL.Image.Image image mode=RGB size=64x64 at 0x7F0E1D01BC50>

[[1.]]
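For the door to make sensible decisions, a new photo should be preprocessed the same way as the training images, which were only divided by 255, and the sigmoid output then needs a threshold. The sketch below is a hypothetical NumPy helper plus a hypothetical door rule with an assumed 0.5 threshold; it expects the image to have been resized to 64x64 already, for example by image.load_img(..., target_size=(64, 64)) and image.img_to_array.

```python
import numpy as np

def prepare_image(img_array):
    """Turn one 64x64x3 RGB array (values 0-255) into a (1, 64, 64, 3) batch in [0, 1]."""
    x = np.asarray(img_array, dtype=np.float32)
    if x.shape != (64, 64, 3):
        raise ValueError("expected a 64x64 RGB image, got shape %s" % (x.shape,))
    x = x / 255.0                      # same scaling as X_train_orig / 255.
    return np.expand_dims(x, axis=0)   # add the batch axis

def open_door(prob, threshold=0.5):
    """Hypothetical rule: open only if the predicted 'happy' probability exceeds the threshold."""
    return float(prob) > threshold

fake_img = np.full((64, 64, 3), 255, dtype=np.uint8)   # an all-white dummy image
batch = prepare_image(fake_img)
print(batch.shape, batch.max())           # (1, 64, 64, 3) 1.0
print(open_door(0.93), open_door(0.12))   # True False
```

With the real model this would read open_door(happyModel.predict(prepare_image(x_raw))[0, 0]), where x_raw is the array returned by image.img_to_array before any further preprocessing.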


Free to repost: non-commercial, no derivatives, attribution required (Creative Commons 3.0 license)