AI Quantitative Trading: Using PCA to Reduce the Dimensionality of Multiple Factors and Remove Collinearity (Part 4)

This section focuses on one technique: using principal component analysis (PCA) in scikit-learn.

Using PCA to reduce the dimensionality of multiple factors and remove collinearity

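```python
# Jupyter/IPython magic: render matplotlib figures inline in the notebook
%matplotlib inline

import matplotlib.pyplot as plt
from sklearn.decomposition import PCA

# The Iris dataset is probably the best-known dataset in pattern recognition; many articles use it.
# It contains 150 records. The first four columns are sepal length, sepal width, petal length and
# petal width, the 4 attributes used to identify the iris species; the 5th column is the species
# (three classes: Setosa, Versicolour, Virginica). load_iris() returns the attributes and the
# species separately, as data.data and data.target.
# @see https://www.jianshu.com/p/8c5f9f2549c5
from sklearn.datasets import load_iris

data = load_iris()  # load the dataset
print(type(data))

y = data.target  # class labels, already encoded: 0, 1 and 2 stand for the three species
X = data.data    # attribute (feature) data

# Print the data
print('y:', y)
print('X:', X[:3])

# PCA documentation: https://www.cnblogs.com/pinard/p/6243025.html
# n_components: the number of dimensions to keep after PCA. The most common usage is to specify
# the target dimension directly, in which case n_components is an integer >= 1.
pca = PCA(n_components=2)  # principal component analysis

# Using principal component analysis (PCA) in scikit-learn
# @doc https://www.cnblogs.com/eczhou/p/5433856.html
# fit_transform(X): fits the PCA model on X and returns the reduced data in one step.
reduced_X = pca.fit_transform(X)
```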
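`n_components` can be an integer number of dimensions, but scikit-learn's `PCA` also accepts a float between 0 and 1. In that case it keeps the smallest number of components whose cumulative explained variance reaches that fraction. The sketch below is a minimal illustration on the same Iris data. The 0.95 threshold is an arbitrary choice, and `svd_solver="full"` is set explicitly because that solver supports the float form.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA

X = load_iris().data

# Keep all 4 components to see how much variance each one explains
pca_full = PCA().fit(X)
ratios = pca_full.explained_variance_ratio_
print("explained variance ratio:", np.round(ratios, 4))
print("cumulative:", np.round(np.cumsum(ratios), 4))

# A float n_components keeps just enough components to explain 95% of the variance
pca_95 = PCA(n_components=0.95, svd_solver="full").fit(X)
print("components kept:", pca_95.n_components_)
```

Reading the cumulative ratios is a data-driven way to pick the `2` used above, instead of fixing it in advance.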

```
<class 'sklearn.utils.Bunch'>
y: [0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0
 0 0 0 0 0 0 0 0 0 0 0 0 0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1
 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 2 2 2 2 2 2 2 2 2 2 2
 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2
 2 2]
X: [[5.1 3.5 1.4 0.2]
 [4.9 3.  1.4 0.2]
 [4.7 3.2 1.3 0.2]]
```
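`fit_transform` fits the model and returns the projected data in one call. With the default `whiten=False`, that projection is just the mean-centered data multiplied by the transposed principal axes stored in `components_`. The check below is a sketch that reproduces `reduced_X` by hand, to make clear what the two output columns are.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA

X = load_iris().data
pca = PCA(n_components=2)
reduced_X = pca.fit_transform(X)

# Manual projection: subtract each feature's mean, then project onto the principal axes
manual = (X - pca.mean_) @ pca.components_.T

print(pca.components_.shape)  # (2, 4): one row per principal axis, one column per original feature
print(np.allclose(reduced_X, manual))
```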

print("reduced_X:\n", reduced_X[:5])reduced_X:

[[-2.68412563 0.31939725]

[-2.71414169 -0.17700123]

[-2.88899057 -0.14494943]

[-2.74534286 -0.31829898]

[-2.72871654 0.32675451]]red_x, red_y = [], []

blue_x, blue_y = [], []

green_x, green_y = [], []

for i in range(len(reduced_X)):

if y[i] == 0:

red_x.append(reduced_X[i][0])

red_y.append(reduced_X[i][1])

elif y[i] == 1:

blue_x.append(reduced_X[i][0])

blue_y.append(reduced_X[i][1])

else:

green_x.append(reduced_X[i][0])

green_y.append(reduced_X[i][1])

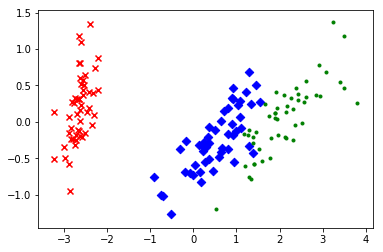

plt.scatter(red_x, red_y, c='r', marker='x')

plt.scatter(blue_x, blue_y, c='b', marker='D')

plt.scatter(green_x, green_y, c='g', marker='.')

plt.show()
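The loop above sorts the projected points into three lists by class label. NumPy boolean masks do the same grouping without index bookkeeping. The sketch below is an equivalent, more compact version: colors and markers are copied from the original, while the axis labels, legend and the non-interactive `Agg` backend are additions. The backend is there only so the snippet runs without a display.

```python
import matplotlib
matplotlib.use("Agg")  # headless backend; omit this line inside a notebook
import matplotlib.pyplot as plt
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA

iris = load_iris()
X, y = iris.data, iris.target
reduced_X = PCA(n_components=2).fit_transform(X)

fig, ax = plt.subplots()
# One scatter call per class; the mask y == label selects that class's rows
for label, color, marker in [(0, "r", "x"), (1, "b", "D"), (2, "g", ".")]:
    mask = y == label
    ax.scatter(reduced_X[mask, 0], reduced_X[mask, 1], c=color, marker=marker,
               label=iris.target_names[label])
ax.set_xlabel("PC1")
ax.set_ylabel("PC2")
ax.legend()
```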

```python
import numpy as np
import pandas as pd
from sklearn.decomposition import PCA

## Data: 10 factors for 20 stocks
data = pd.read_pickle('./data/data.pkl')
data
```

| | amt | close_adj | free_float_cap | high_adj | low_adj | open_adj | pe_ttm | s_val_pb_new | volume | vwap_adj |
|---|---|---|---|---|---|---|---|---|---|---|
| 000001.SZ | 708001.802 | 1101.920280 | 7.762497e+10 | 1104.080908 | 1085.715570 | 1092.197454 | 7.0569 | 0.7958 | 696364.55 | 1098.367599 |
| 000008.SZ | 95248.208 | 90.435282 | 6.529874e+09 | 90.435282 | 88.653306 | 89.321547 | 12.8967 | 1.6300 | 237609.26 | 89.290512 |
| 000009.SZ | 53761.994 | 32.916633 | 7.795625e+09 | 32.916633 | 32.401160 | 32.769355 | 187.1584 | 1.8881 | 121099.81 | 32.691872 |
| 000011.SZ | 32033.160 | 34.072514 | 1.421200e+09 | 34.247604 | 33.442190 | 33.582262 | 30.3630 | 2.0098 | 33109.00 | 33.880129 |
| 000012.SZ | 25728.498 | 99.003723 | 7.073757e+09 | 99.241142 | 97.816628 | 98.528885 | 20.4627 | 1.3331 | 61914.45 | 98.659267 |
| 000014.SZ | 27710.206 | 52.573347 | 1.300307e+09 | 52.627158 | 51.766182 | 52.142859 | 361.7140 | 2.6592 | 28548.15 | 52.231542 |
| 000016.SZ | 25488.929 | 73.895515 | 3.744168e+09 | 74.318985 | 72.836840 | 73.260310 | 1.5684 | 1.0484 | 73253.56 | 73.674213 |
| 000017.SZ | 56370.472 | 12.957441 | 1.156651e+09 | 13.064749 | 12.555036 | 12.662344 | 669.4724 | 156.8050 | 117229.72 | 12.899891 |
| 000020.SZ | 11288.473 | 19.042066 | 7.009443e+08 | 19.147174 | 18.779296 | 19.007030 | 2739.5829 | 9.5836 | 10429.57 | 18.960654 |
| 000021.SZ | 46512.091 | 85.769560 | 4.388595e+09 | 86.737926 | 84.524518 | 84.524518 | 17.7767 | 1.4760 | 75085.47 | 85.694205 |
| 000023.SZ | 46699.777 | 34.231440 | 1.091233e+09 | 34.311048 | 33.170000 | 33.461896 | 245.6557 | 4.3039 | 36694.05 | 33.771832 |
| 000025.SZ | 74855.544 | 51.607548 | 1.641907e+09 | 52.285356 | 50.967396 | 51.099192 | 131.2186 | 8.1558 | 27277.20 | 51.668800 |
| 000026.SZ | 23458.190 | 57.365100 | 1.511165e+09 | 57.585735 | 56.261925 | 56.261925 | 20.5749 | 1.3414 | 30146.25 | 57.228763 |
| 000027.SZ | 20248.221 | 71.280909 | 5.687565e+09 | 71.415148 | 70.475475 | 71.012431 | 38.3097 | 0.9983 | 38347.88 | 70.880084 |
| 000028.SZ | 71935.501 | 152.677618 | 4.802789e+09 | 154.512404 | 147.524602 | 154.512404 | 14.1699 | 1.5199 | 18637.92 | 150.672290 |
| 000030.SZ | 12359.269 | 12.983048 | 2.242071e+09 | 13.079696 | 12.821968 | 12.821968 | 8.6033 | 1.1840 | 30697.56 | 12.970614 |
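The five price-type factors (`close_adj`, `high_adj`, `low_adj`, `open_adj`, `vwap_adj`) move almost in lockstep across stocks. That is exactly the collinearity PCA is meant to remove. A quick way to see it is the pairwise correlation matrix. The sketch below copies six rows of those columns from the table above into a small DataFrame. With the full dataset, calling `data.corr()` directly would give the same kind of matrix for all 10 factors.

```python
import pandas as pd

# Six rows of the price-type factors, copied from the table above
prices = pd.DataFrame(
    {
        "close_adj": [1101.920280, 90.435282, 32.916633, 34.072514, 99.003723, 52.573347],
        "high_adj":  [1104.080908, 90.435282, 32.916633, 34.247604, 99.241142, 52.627158],
        "low_adj":   [1085.715570, 88.653306, 32.401160, 33.442190, 97.816628, 51.766182],
        "open_adj":  [1092.197454, 89.321547, 32.769355, 33.582262, 98.528885, 52.142859],
        "vwap_adj":  [1098.367599, 89.290512, 32.691872, 33.880129, 98.659267, 52.231542],
    },
    index=["000001.SZ", "000008.SZ", "000009.SZ", "000011.SZ", "000012.SZ", "000014.SZ"],
)

# Pearson correlation between every pair of factors
corr = prices.corr()
print(corr.round(4))
```

Every off-diagonal entry is close to 1, so these five columns carry essentially one piece of information.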

```python
## Principal components
# PCA is applied here to the raw (unstandardized) factor values
pca = PCA(n_components=5)
new_factor = pca.fit_transform(data)
new_factor = pd.DataFrame(new_factor, index=data.index)
new_factor
```

| | 0 | 1 | 2 | 3 | 4 |
|---|---|---|---|---|---|
| 000001.SZ | 6.377510e+10 | 485942.984166 | -54392.086467 | 56.132720 | -209.499221 |
| 000002.SZ | 1.264712e+11 | -267580.947390 | 25752.104230 | 27.287902 | 140.622772 |
| 000004.SZ | -1.300303e+10 | -85789.684193 | -5983.331038 | -195.271656 | -0.051523 |
| 000005.SZ | -1.137258e+10 | 291062.410361 | 74031.002966 | 69.502317 | 368.118970 |
| 000006.SZ | -9.210636e+09 | -25377.513582 | 7956.062376 | -251.674600 | 142.337150 |
| 000008.SZ | -7.319989e+09 | 138052.322886 | 41383.149203 | -111.247787 | 75.656826 |
| 000009.SZ | -6.054238e+09 | 9540.621741 | 27680.113903 | -9.510281 | -351.948397 |
| 000011.SZ | -1.242866e+10 | -47538.092151 | -15001.122936 | -285.435248 | 13.547173 |
| 000012.SZ | -6.776106e+09 | -52389.487547 | 22431.518913 | -214.884139 | -287.987735 |
| 000014.SZ | -1.254956e+10 | -53006.156885 | -13706.803940 | 45.134073 | 46.866296 |
| 000016.SZ | -1.010570e+10 | -26360.473236 | 17114.990590 | -242.171368 | -92.988084 |
| 000017.SZ | -1.269321e+10 | 39478.461145 | 1966.963789 | 421.307754 | 121.991538 |
| 000020.SZ | -1.314892e+10 | -73800.555787 | -9619.622189 | 2420.280675 | -36.415473 |
| 000021.SZ | -9.461268e+09 | -18028.951981 | 1485.992029 | -248.465245 | -21.433882 |
| 000023.SZ | -1.275863e+10 | -35912.823909 | -27361.886965 | -88.246114 | 105.805322 |
| 000025.SZ | -1.220796e+10 | -33734.443465 | -54860.817096 | -249.144815 | 225.648439 |
| 000026.SZ | -1.233870e+10 | -54602.778961 | -8514.546041 | -286.887200 | 19.806017 |
| 000027.SZ | -8.162298e+09 | -69072.328323 | 11744.352752 | -226.614528 | -274.577682 |
| 000028.SZ | -9.047074e+09 | -58038.368334 | -46036.817011 | -353.092322 | 196.947300 |
| 000030.SZ | -1.160779e+10 | -62844.194555 | 3930.782933 | -277.000138 | -182.445808 |
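Column 0 of the table above is on the order of 1e10, the scale of `free_float_cap`. In fact it is essentially `free_float_cap` minus a constant. For 000001.SZ, 7.762497e10 - 6.377510e10 ≈ 1.385e10, and for 000008.SZ, 6.529874e9 + 7.319989e9 ≈ 1.385e10. That constant is consistent with PCA subtracting the column mean. Because PCA looks for directions of maximum variance, a column whose variance is many orders of magnitude larger than the others takes over the first component. A common remedy is to standardize each factor before PCA.

The sketch below illustrates this on made-up data: the column names mirror the article, but the values are random and hypothetical, not the contents of `data.pkl`. It also checks that the resulting components are mutually uncorrelated, which is what "removing collinearity" means in practice.

```python
import numpy as np
import pandas as pd
from sklearn.decomposition import PCA
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Hypothetical factor data for 20 stocks (random values, NOT the article's data.pkl)
rng = np.random.default_rng(0)
n = 20
close = rng.uniform(10, 150, size=n)
data = pd.DataFrame({
    "close_adj": close,
    "high_adj": close * (1 + rng.uniform(0, 0.02, size=n)),  # nearly collinear with close
    "low_adj": close * (1 - rng.uniform(0, 0.02, size=n)),   # nearly collinear with close
    "pe_ttm": rng.uniform(5, 300, size=n),
    "free_float_cap": rng.uniform(7e8, 8e10, size=n),        # much larger scale than the rest
})

# 1) PCA on raw values: the first axis loads almost entirely on free_float_cap
raw_pca = PCA(n_components=3).fit(data)
print(pd.Series(raw_pca.components_[0], index=data.columns).round(4))

# 2) Standardize every factor (zero mean, unit variance), then apply PCA
pipe = make_pipeline(StandardScaler(), PCA(n_components=3))
new_factor = pd.DataFrame(pipe.fit_transform(data), index=data.index)
print(pipe.named_steps["pca"].explained_variance_ratio_.round(3))

# 3) The principal components are uncorrelated: off-diagonal correlations are ~0
print(new_factor.corr().round(6))
```

After standardization, `explained_variance_ratio_` reflects the structure of the factors rather than their units. It can then guide how many components to keep, instead of fixing `n_components=5` in advance.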



Free to republish, non-commercial, no derivatives, attribution required (Creative Commons 3.0 license)